Last November, we took the latest big step in our Prime Air journey, launching our MK30 drone for commercial delivery service in the West Valley of Arizona’s Phoenix metro area and in College Station, Texas.

As we expected, delivering packages to customers across these different environments provided valuable operational insights. For example, we knew that we’d experience more dust in the air in Phoenix than we would in other places. While we’d already designed the drone to be safe in those environments, the data from these flights still gave us opportunities to learn and build even more safety measures into our drones. One example involves an altitude sensor that we use: we saw that environmental factors like dust can sometimes interfere with its readings.

While we never experienced a safety event with it in service, we learned that, in extremely rare cases, this could have caused the drone to receive an inaccurate reading about its altitude. Even though this is highly unlikely and we hadn’t encountered any actual safety issues in flights, we saw no reason to take risks. Safety is and always will be our top priority.

So, we took those learnings and decided to proactively enhance the fleet. While we made those improvements, we voluntarily paused operations across the fleet. This is a normal part of our rigorous internal safety and engineering processes. We’ve now made the enhancements, rigorously tested them, and received FAA approval, so we’re back up in the skies delivering to customers.

You can see the same kind of processes and thinking in how we developed the MK30 from the beginning.

New innovations require new ways of proving they’re safe and effective, so as we created the drone, we also created a way of testing it. It’s a process that requires a blend of creativity and rigor, because while the FAA’s specific regulations are limited in comparison to its rules for airplanes, it still demands convincing and overwhelming evidence of a new flier’s safety.

“That is one of the most challenging, but also most rewarding, parts of our job,” says Phil Hornstein, who leads system safety for Prime Air, focusing on the drone’s design and engineering. “Our aim at Prime Air is to establish and meet a safety bar that is higher than what is required by regulators.”

Doing that requires identifying weaknesses, while also providing the evidence required to get regulatory approval. Unexpected tools for the job include Cozy Coupe kiddie cars, construction cranes, helicopters, and more. It also requires running lots and lots of flights: 5,166 to date, to be exact, totaling 908 hours in the air.

As a result of that testing—and the certification from the Federal Aviation Administration that ensures the MK30 can safely integrate into the airspace and safely conduct deliveries to our customers—we have complete confidence in the underlying safety of the drone.

Here’s how we did it.

Standards and challenges

To test a design like the MK30 and ensure that it’s safe, we push it to and beyond its limits in safe environments, over and over again. That allows us to find potential issues and fix them, and understand where the limits of the technology are, so we can be sure we won’t reach them in real-world scenarios.

Because the drone industry doesn’t have decades of established safety protocols to build on, the team looks to the places that do, adapting them as necessary. Standard ARP4761, for example, is a handy breakdown of how to assess the safety of an aircraft. You just have to account for the various differences between a delivery drone and a multi-million-dollar aircraft hauling hundreds of people over thousands of miles.

They also drew ideas from the automotive industry’s expertise in testing smaller vehicles, the self-driving car’s approach to autonomous creations, and military standards for system safety. Following these protocols helped the team do things like identify and design out single points of failure, such as using the same power source to supply both a component and its backup.

Goals set, the next step was to think through every hurdle along the way. Those fall into three general categories. Functional hazards are things that can go wrong with the drone’s software or hardware. The human factors piece accounts for any mistakes an operator might make (a helpfully limited area in this highly automated system). And the environmental bucket catches everything the drone’s liable to encounter, from winds and hail to toys lying in a customer’s backyard.

Injected risk

Those simulated tests are key to building the confidence needed to take a drone aloft for a series of real, midair challenges. For every hazard in that lengthy list, the Amazon team creates a test to recreate the problem and see whether their drone can handle it.

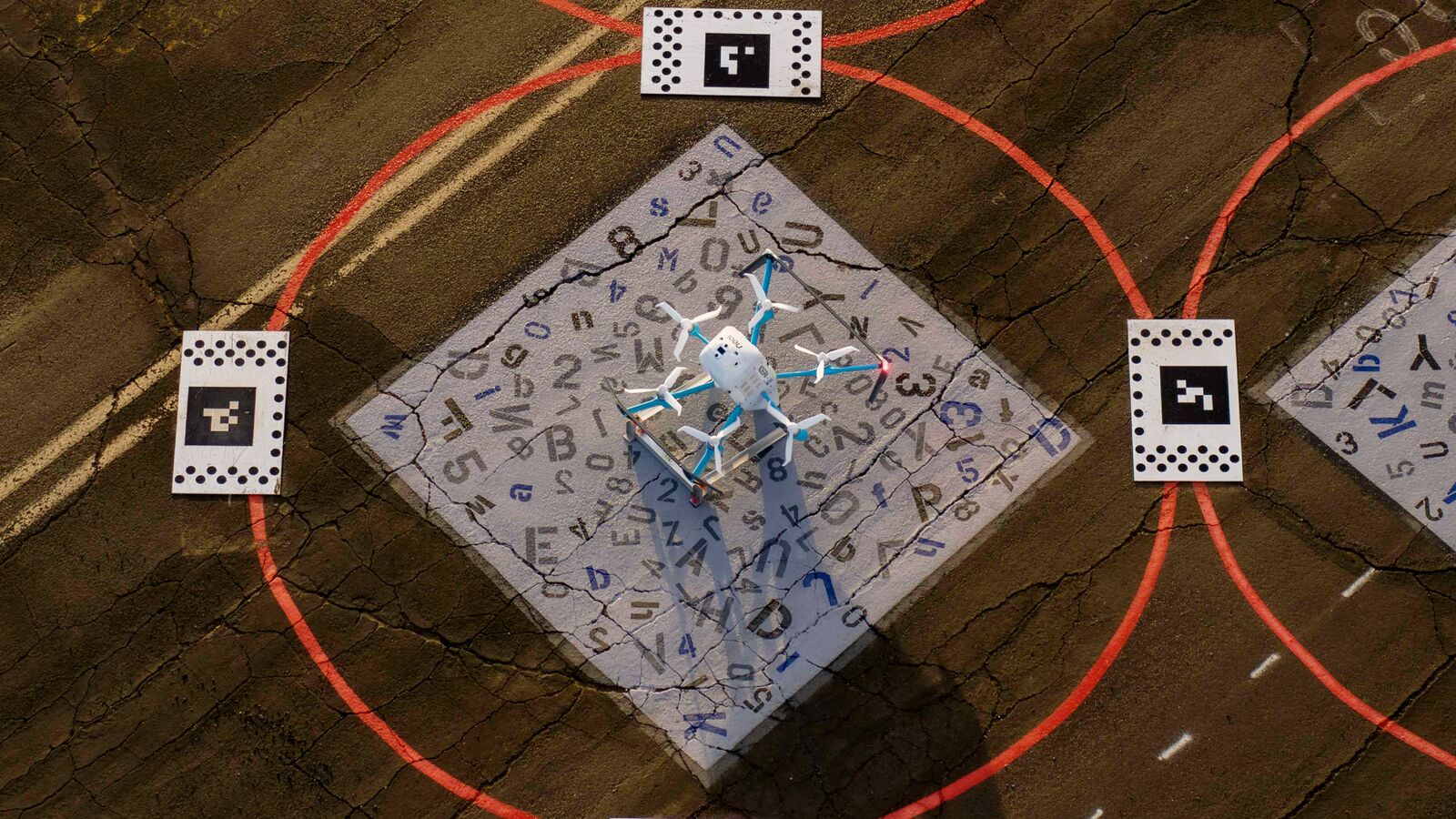

In a “MEP out test,” the team uses software to stop the MK30’s motor, its electronic speed controller, or a propeller, to verify it can stay in flight, safely return to the launch site, and land at a designated pad that’s reserved for such scenarios. In switchover testing, they “inject” a failure that knocks out the drone’s primary flight computer, to verify it can switch to a backup that can take control and safely get the drone back to base. They run both these tests over and over, blitzing the drone at different points of a mission, in horizontal and vertical flight, right after launch and at the moment of delivery.
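As a rough illustration of how a campaign like this might be organized, the sketch below enumerates every combination of injected fault, mission point, and flight mode, then runs a toy failover model against each one. This is not Prime Air's tooling: every name here (`ToyDrone`, `run_campaign`, the fault and mission-point labels, including the `en_route` point) is a hypothetical stand-in, and the rule that the drone tolerates any single fault is an assumption made only for the example.

```python
from dataclasses import dataclass
from itertools import product

# Hypothetical labels loosely based on the description above;
# these are not Prime Air's real identifiers.
FAULTS = ["motor", "esc", "propeller", "primary_flight_computer"]
MISSION_POINTS = ["after_launch", "en_route", "at_delivery"]  # "en_route" is assumed
FLIGHT_MODES = ["vertical", "horizontal"]


@dataclass
class ToyDrone:
    """Toy model with one primary and one backup flight computer."""
    active_computer: str = "primary"
    failed_components: tuple = ()

    def inject(self, fault: str) -> None:
        # Record the fault; losing the primary computer triggers a switchover.
        self.failed_components += (fault,)
        if fault == "primary_flight_computer":
            self.active_computer = "backup"

    def can_return_to_base(self) -> bool:
        # Assumption for this sketch: any single fault is survivable.
        return len(self.failed_components) <= 1


def run_campaign() -> dict:
    """Inject each fault at each mission point in each flight mode."""
    results = {}
    for fault, point, mode in product(FAULTS, MISSION_POINTS, FLIGHT_MODES):
        drone = ToyDrone()  # fresh drone per scenario, so faults never accumulate
        drone.inject(fault)
        results[(fault, point, mode)] = drone.can_return_to_base()
    return results


if __name__ == "__main__":
    results = run_campaign()
    print(f"{len(results)} scenarios, {sum(results.values())} passed")
```

The point of the structure is coverage: listing faults, mission points, and flight modes separately and taking their product makes it hard to accidentally skip a combination, which mirrors the "over and over, at different points of a mission" approach described above.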

Safely integrating our drone with other aircraft in the same airspace is one of the most important safety imperatives for the program, and requires skilled operators who understand the mechanics of drone flight. Our operators run through tests to ensure teams know and follow our standard operating procedures for pre- and post-flight checks.

Then there’s obstacle avoidance. Before a flight, Prime Air’s Airspace and Mission Orchestration System generates a high-fidelity model of the operating area. That model creates flight paths that avoid known obstacles like buildings, bridges, and power lines.

But, knowing they can’t guarantee the landing spot in a customer’s yard will be clear of obstacles, the team created a test regime that ensures the drone can understand when it shouldn’t deliver a package (it delivers while hovering 12 feet up). This involved things like putting a kid’s toy on the landing spot, attached to a rope so the team’s designated “yanker” could haul it out of the way to act as a moving obstacle.

As a rule, the operations team keeps the drones to specific airspace that should be clear (it stays away from airports), and monitors the signals many aircraft use to broadcast their position. The drone’s ability to spot and dodge an aircraft may be its last line of defense, but that makes it all the more important to test, and to do so in as realistic a way as possible.

“When we test the detect and avoid capability, we’re flying an airplane at the drone,” says Adam Martin, who runs Prime Air’s flight test and safety organizations. “We’ll fly a helicopter at it.” And they’ll do it a whole bunch of times, with different routes, angles, heights, speeds, and scenarios. “We ran many, many tests of that type,” he says.

Forward flight

While we’re confident in the testing we do before we get FAA certification and start making real-world flights, we don’t stop when we hit those milestones. Testing is a game of one-upmanship, where each evolution in the design or use of a product requires a similar evolution in how it’s proven safe, and where we’re always asking ourselves “what other situation could we possibly encounter, and how do we get ahead of it?”

So we learn more, study more, and get ready to ace the next test.

This article has been updated since its original publication on February 27, 2025.
