Simple. The name of the first Amazon Web Services (AWS) service, Amazon S3, begins with that word: Simple Storage Service.

"It's ironic because what we were trying to do (store data on the internet, and do it really, really well) was not so simple," said Amazon Chief Technology Officer Dr. Werner Vogels, recalling the earliest days of what we now call the cloud. "For customers it had to be 'simple,' but designing and building S3 wasn't."

Today we squirrel away and access all kinds of data in the cloud with very little effort or thought about how it happens. It's easy to forget what things were like 15 years ago, when S3 launched in 2006. The iPhone had yet to launch. Mariah Carey was on top of the pop music charts in the U.S. (again). Comedy hit 30 Rock premiered. Facebook was just expanding beyond college students.

The internet was a growing part of many people's lives, so the idea that there should be an easy way to store and access everything from thumbnail images to audio files and spreadsheets online made sense to technologists, if not to everyone fiddling with a computer. It made even more sense to startup entrepreneurs and would-be entrepreneurs, who often had to spend thousands, and in many cases tens of thousands, of dollars on their own datacenters before they could even see whether an idea had legs.

The concept of internet storage was therefore something that people were expecting, even clamoring for. For it to really succeed, however, it had to be equal parts secure, durable (in the sense that data was never lost), always available, and reliable. All of that, and also fairly easy to use and affordable.

Others had tried to hit all those engineering notes and build their own internet storage, especially within large enterprises that handled lots of scanned documents. These efforts usually went nowhere because the systems ended up either too hard to use or too expensive to make sense. Building internet storage was also not a slam dunk because you couldn't simply take a typical file system structure and slap it on the internet.

The early S3 team had to puzzle out what was really needed for storage on the internet, and then invent a way to build that out.

The design trend of the time was to add as many features to a software suite as possible. But during an early review with Jeff Bezos and Andy Jassy to dig into exactly how to pull off their internet storage idea, the discussion reached the opposite conclusion. In contrast to the over-engineered software of the era, the S3 team would build a storage system that did what it needed to do, and did it really, really well.

Over days and pints of Hammerhead ale at the Six Arms in Seattle's Capitol Hill neighborhood and bagels in the Seattle Convention Center, the team sketched out key elements for the distributed system that would become S3.

Among the 10 technical design principles they created, they emphasized things like eliminating bottlenecks and single points of failure through decentralization. They assumed that hardware would break as a normal mode of operation, and they engineered ways to keep the system sound even when things failed. Anticipating what would become a full-fledged cloud platform, they designed with an eye toward the services that were yet to be built. That meant S3 shouldn't try to be a single service that did everything for everyone but rather a component that could be used as a building block for other services to come.
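Two of those principles, no single point of failure and treating hardware failure as normal, can be illustrated with a toy model. The Python below is a minimal sketch and not how S3 is actually built. It assumes a hypothetical `ReplicatedStore` that copies every write to several in-memory `Node` objects and refuses a write unless at least `write_quorum` of them are up (both names are invented for illustration). When one node fails, any surviving replica can still serve the read.

```python
from dataclasses import dataclass, field


class QuorumError(Exception):
    """Raised when too few replicas are available to accept a write."""


@dataclass
class Node:
    """A hypothetical storage node; set healthy=False to simulate a hardware failure."""
    name: str
    healthy: bool = True
    data: dict = field(default_factory=dict)


class ReplicatedStore:
    """Toy store with no single point of failure: every write lands on several nodes."""

    def __init__(self, nodes, write_quorum):
        self.nodes = nodes
        self.write_quorum = write_quorum  # hypothetical: minimum replicas per write

    def put(self, key, value):
        healthy = [node for node in self.nodes if node.healthy]
        # Check before writing so a rejected write leaves no partial copies behind.
        if len(healthy) < self.write_quorum:
            raise QuorumError(f"{len(healthy)} healthy nodes, need {self.write_quorum}")
        for node in healthy:
            node.data[key] = value
        return len(healthy)  # number of replicas written

    def get(self, key):
        # Any healthy replica can answer, so losing one node does not lose the data.
        for node in self.nodes:
            if node.healthy and key in node.data:
                return node.data[key]
        raise KeyError(key)


nodes = [Node("a"), Node("b"), Node("c")]
store = ReplicatedStore(nodes, write_quorum=2)
store.put("photo.jpg", b"...")
nodes[0].healthy = False  # simulate a failed disk or server
print(store.get("photo.jpg"))  # b'...'
```

Here a failed node is an ordinary event the system routes around rather than an outage, which is the spirit of the principle; a production system would also repair lost replicas and spread them across independent hardware.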

The final design principle, and really the organizing principle for the whole effort, had a Yoda-like flair that developers could internalize and express in their work. They wrote, "The system should be made as simple as possible (but no simpler)." Let that sink in a bit.

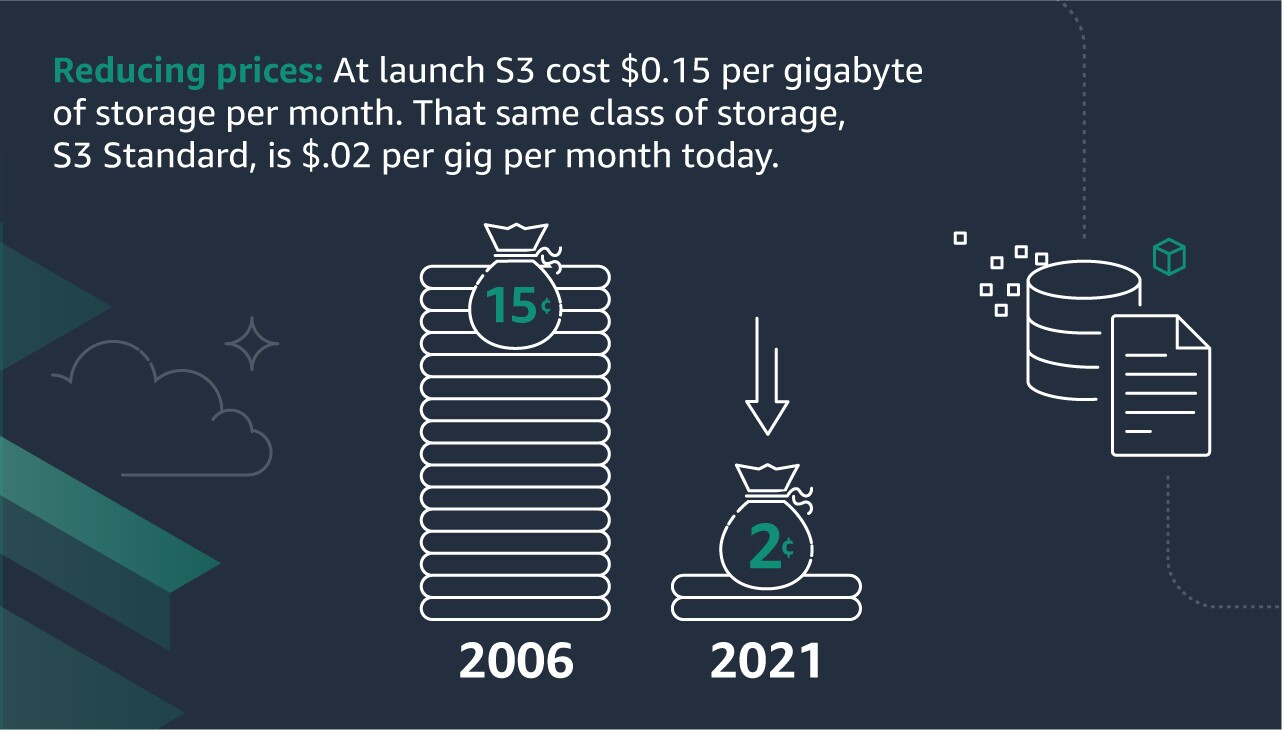

What they landed on was a completely new system that used "objects," "buckets," and "keys" to offer secure internet storage that developers could actually use and afford at $0.15 per gigabyte of storage per month (the price for what is now called S3 Standard storage has since dropped to about $0.02 per gig per month).
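To put the price drop in concrete terms, here is a small worked calculation. It is a sketch that uses only the two per-gigabyte figures quoted above; it ignores request and data-transfer charges, and real S3 pricing varies by Region and volume tier. The 500 GB example size is an arbitrary assumption. `Decimal` is used so the currency arithmetic is exact.

```python
from decimal import Decimal

# Per-GB-month prices quoted in the article: the launch price and the
# approximate present-day S3 Standard price ("about $0.02").
LAUNCH_PRICE_PER_GB_MONTH = Decimal("0.15")
APPROX_CURRENT_PRICE_PER_GB_MONTH = Decimal("0.02")


def monthly_storage_cost(gigabytes, price_per_gb_month):
    """Storage-only cost for one month at a flat per-GB rate."""
    return Decimal(gigabytes) * price_per_gb_month


print(monthly_storage_cost(500, LAUNCH_PRICE_PER_GB_MONTH))          # 75.00
print(monthly_storage_cost(500, APPROX_CURRENT_PRICE_PER_GB_MONTH))  # 10.00
```

At these rates, the same 500 GB costs 7.5 times less per month than it did at launch.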

How it worked

One analogy for what the S3 team built using their objects, buckets, and keys system is the classic library. It's not a perfect analogy, but it gets close. Think of it this way:

Objects. In our S3 library, books are objects. Objects can be any form of data: a photo, a piece of music, a document, a call center exchange. The data itself is opaque to S3, but objects also contain metadata that describe the object, things like the content type and date last modified.

Buckets. Objects are stored in buckets. In the library analogy, buckets are like the art history or geology sections. Buckets are how you classify and organize all the objects inside. A bucket can contain a single object or literally millions of them; there is no limit to the number of objects a bucket can hold. In that sense, buckets are infinitely expandable shelves, labeled in whatever way makes sense to the customer.

Keys. Think of keys as our library's card catalogue. A key (not to be confused with the encryption keys you may have heard of) is the unique name of an object within a bucket. Every object in a bucket has exactly one key. Because a bucket and key together uniquely identify each object, S3 can be thought of as a basic data map between "bucket + key" and the object itself. The bucket takes you to the right shelf, and the key finds the right object on it.

Library cards. Unlike your average public library, S3 doesn't let just anyone stroll in and start rummaging through its buckets. Access is strictly controlled: buckets are private by default, and only when permission is granted can a bucket be made public or opened to a particular set of people or another service. In other words, you need the right library card to get in.
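The library model above can be sketched in a few lines of code. This is a hypothetical, in-memory illustration of the concepts and not the S3 API: `ToyObjectStore`, `Bucket`, `StoredObject`, `grant_read`, and the `caller` parameter are all invented names. Objects carry opaque bytes plus metadata, a bucket maps each key to exactly one object, and reads are refused unless the caller owns the bucket or holds an explicit grant.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class StoredObject:
    """The "book": opaque bytes plus metadata that describes them."""
    data: bytes
    content_type: str
    last_modified: datetime


@dataclass
class Bucket:
    """The "section": holds any number of objects, each under exactly one key."""
    name: str
    owner: str
    readers: set = field(default_factory=set)    # hypothetical "library cards"
    objects: dict = field(default_factory=dict)  # key -> StoredObject


class ToyObjectStore:
    """Illustrative (bucket, key) -> object map with owner-granted read access."""

    def __init__(self):
        self.buckets = {}

    def create_bucket(self, name, owner):
        if name in self.buckets:
            raise ValueError(f"bucket {name!r} already exists")
        self.buckets[name] = Bucket(name=name, owner=owner)

    def grant_read(self, bucket_name, principal):
        # Only an explicit grant opens a bucket to someone other than its owner.
        self.buckets[bucket_name].readers.add(principal)

    def put(self, bucket_name, key, data, content_type, caller):
        bucket = self.buckets[bucket_name]
        if caller != bucket.owner:
            raise PermissionError(f"{caller} may not write to {bucket_name}")
        # Storing under an existing key replaces that object: one key, one object.
        bucket.objects[key] = StoredObject(data, content_type, datetime.now(timezone.utc))

    def get(self, bucket_name, key, caller):
        bucket = self.buckets[bucket_name]
        # Private by default: only the owner and granted readers may read.
        if caller != bucket.owner and caller not in bucket.readers:
            raise PermissionError(f"{caller} may not read from {bucket_name}")
        return bucket.objects[key]


store = ToyObjectStore()
store.create_bucket("geology", owner="alice")
store.put("geology", "minerals/quartz.jpg", b"<jpeg bytes>", "image/jpeg", caller="alice")
store.grant_read("geology", "bob")
print(store.get("geology", "minerals/quartz.jpg", caller="bob").content_type)  # image/jpeg
```

A plain dictionary keyed by name is the simplest way to express the "bucket + key" map; a real service layers durability, scale, and far richer permissions on top of the same idea.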

From the beginning, S3 was designed to scale. And while the S3 team anticipated that people would be keen for an online storage service, no one clearly saw the rocket-like growth in data that was about to happen and how it would be part of so many aspects of our lives. One early S3 team member likened it to what people must have been thinking while watching the Wright brothers try out their flying machines. You could see that planes and flight would exist in our world—the Wrights had just shown it was possible—but you could never have imagined hopping on a commercial airline flight.

Similarly, for most of us the idea of streaming movies on our phones in 2006 would have seemed like magic, never mind collaborating with colleagues instantaneously, swapping stories with family and friends via video, or seeing the detail of a Martian landscape and hearing the sounds of Mars from more than 100 million miles away. How we generate, collect, and analyze data of all kinds, and the insights we are able to draw from it, have moved to the center of much of what we do.

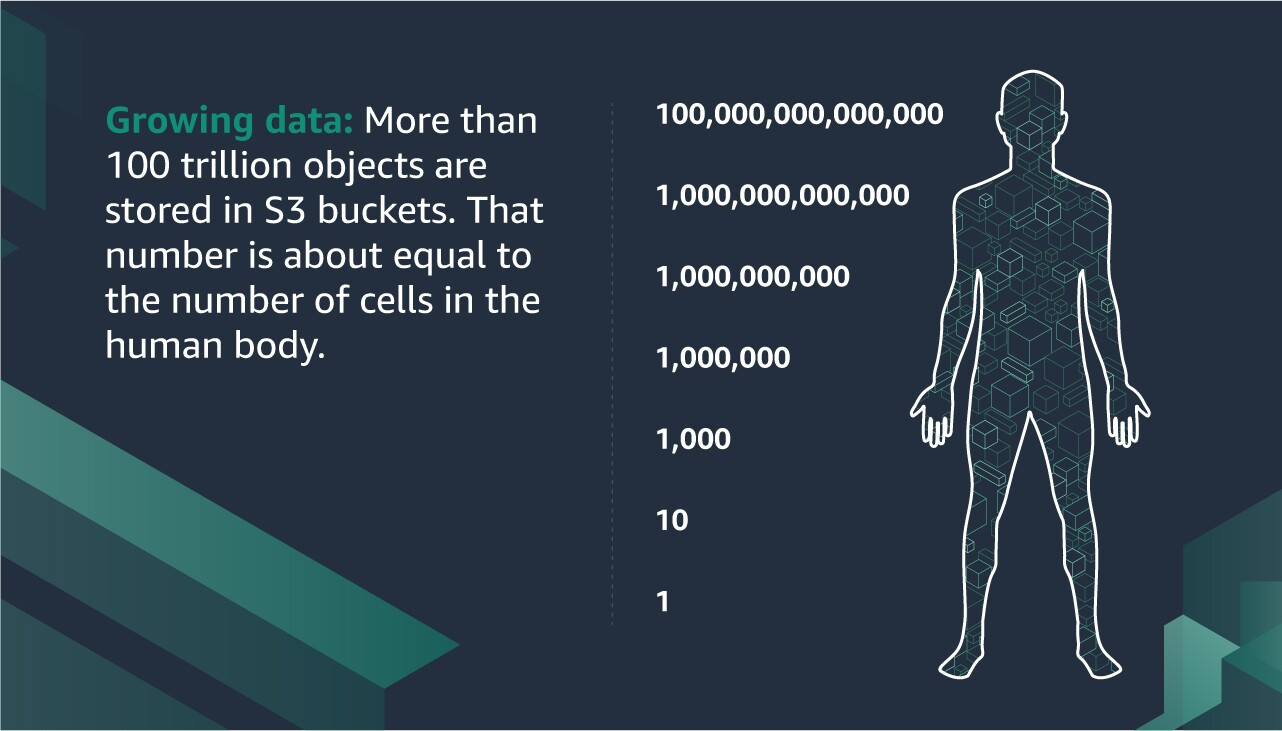

S3 has made that order-of-magnitude leap over and over again in the intervening 15 years. Today, there are more than 100 trillion objects residing in S3 buckets. If that number is hard to wrap your head around, 100 trillion is roughly the number of synapses in the human brain. And data growth isn't slowing. Current estimates suggest that the amount of data created by all our activity over the next three years will be more than the data created over the past 30 years. And, of course, we will need a place to put it.

While S3 may have scaled beyond the team’s initial imaginations, the fundamental approach they engineered is still at the core of the service. It's still based on objects, buckets, and keys. It’s still laser focused on security, durability, availability, and reliability. And even as it continues to evolve, the system is still made as simple as possible (but no simpler).

This year is the 15th anniversary of AWS.