December 19, 2024: This article has been updated to reflect the general availability of Llama 3.3 70B from Meta, which offers advancements in model efficiency and performance optimization.

Llama 3.3

The latest model from technology company Meta, Llama 3.3 70B, is now available in Amazon Bedrock and Amazon SageMaker AI, as well as via Amazon Elastic Compute Cloud (Amazon EC2) using AWS Trainium and Inferentia, and represents advancements in both model efficiency and performance optimization. The new text-only model offers improvements in reasoning, math, general knowledge, instruction following, and tool use compared with the earlier Llama 3.1 70B model. Further, Llama 3.3 70B delivers performance similar to Llama 3.1 405B while requiring only a fraction of the computational resources.

The model’s comprehensive training results in robust understanding and generation capabilities across diverse tasks, including multilingual dialogue use cases, text summarization and analysis, and following instructions.

September 25, 2024

Llama 3.2

The next generation of Llama models from technology company Meta, including its first-ever multimodal models, is available today on Amazon Web Services (AWS) in Amazon Bedrock and Amazon SageMaker AI. The models are also available via Amazon Elastic Compute Cloud (Amazon EC2) using AWS Trainium and Inferentia.

The Llama 3.2 collection builds on the success of previous Llama models to offer new, updated, and highly differentiated models, including small and medium-sized vision LLMs that support image reasoning and lightweight, text-only models optimized for on-device use cases. The new models are designed to be more accessible and efficient, with a focus on responsible innovation and safety. Llama Guard 3 Vision, fine-tuned for content safety classification, is also available today in Amazon SageMaker JumpStart. Here are the highlights:

Meta’s first multimodal vision models, Llama 3.2 11B Vision and Llama 3.2 90B Vision

- Largest models in the Llama 3.2 collection.

- Support image understanding and visual reasoning use cases.

- Excellent for analyzing visual data, such as charts and graphs, to deliver more accurate answers and insights.

- Well suited for image captioning, visual question answering, image-text retrieval, document processing, and multimodal chatbots, as well as long-form text generation, multilingual translation, coding, math, and advanced reasoning.

Llama 3.2 1B and Llama 3.2 3B, optimized for edge and mobile devices

- Lightweight, text-only.

- Processing can be performed in the cloud or locally on the device; running locally lets responses feel instantaneous.

- Ideal for highly personalized applications spanning text generation and summarization, sentiment analysis, customer service applications, rewriting, multilingual knowledge retrieval, and mobile AI-powered writing assistants.

Llama Guard 3 11B Vision: fine-tuned for content safety classification

- Available only in Amazon SageMaker JumpStart.

- Llama Guard 3 11B Vision can be used to safeguard content for both LLM inputs (prompt classification) and LLM responses (response classification).

- Specifically designed to support image reasoning use cases and optimized to detect harmful multimodal (text and image) prompts and text responses to these prompts.
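As a rough illustration of the image reasoning use cases above, the following Python sketch sends one image and a question to a Llama 3.2 Vision model through the Amazon Bedrock Converse API (the `converse` operation of the boto3 `bedrock-runtime` client). It is a minimal sketch, not official sample code: the model ID is a placeholder assumption (some Regions require a cross-region inference profile ID), `build_image_question` and `ask_about_image` are hypothetical helper names, and the client is passed in so the request-building logic can be checked without AWS credentials.

```python
"""Sketch: asking a Llama 3.2 Vision model on Amazon Bedrock about an image.

Assumptions: the model ID below is a placeholder to confirm in the Amazon
Bedrock console; boto3 is installed and credentials with model access exist.
"""

# Hypothetical placeholder; some Regions require an inference profile ID
# (for example, one prefixed with "us.") instead of the base model ID.
VISION_MODEL_ID = "us.meta.llama3-2-11b-instruct-v1:0"

# Image formats accepted by Converse API image content blocks.
SUPPORTED_FORMATS = {"png", "jpeg", "gif", "webp"}


def build_image_question(image_bytes: bytes, image_format: str, question: str) -> list:
    """Return a Converse `messages` list pairing one image with a text question."""
    if image_format not in SUPPORTED_FORMATS:
        raise ValueError(f"unsupported image format: {image_format}")
    return [
        {
            "role": "user",
            "content": [
                # Raw bytes go here; boto3 handles the base64 encoding on the wire.
                {"image": {"format": image_format, "source": {"bytes": image_bytes}}},
                {"text": question},
            ],
        }
    ]


def ask_about_image(client, image_bytes: bytes, image_format: str, question: str,
                    model_id: str = VISION_MODEL_ID) -> str:
    """Send the image and question via `client.converse` and return the reply text."""
    response = client.converse(
        modelId=model_id,
        messages=build_image_question(image_bytes, image_format, question),
        inferenceConfig={"maxTokens": 512, "temperature": 0.2},
    )
    return response["output"]["message"]["content"][0]["text"]

# Example usage (requires boto3, AWS credentials, and Bedrock model access):
#   import boto3
#   bedrock = boto3.client("bedrock-runtime", region_name="us-west-2")
#   with open("chart.png", "rb") as f:  # hypothetical local file
#       print(ask_about_image(bedrock, f.read(), "png", "What trend does this chart show?"))
```

Passing the client in as a parameter keeps the helpers independent of how credentials and Regions are configured, and makes them easy to exercise with a stub.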

According to Meta, Llama 3.2 models have been evaluated on over 150 benchmark datasets, demonstrating competitive performance with leading foundation models. As with Llama 3.1, all of the models support a 128K context length and multilingual dialogue use cases across eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

Visit the AWS News blog for more information on Llama 3.2, and read on for details on the existing capabilities of Meta’s collection of Llama models, which Llama 3.2 builds on, and on how AWS customers have already been using Llama models.

July 23, 2024

Llama 3.1

The Llama 3.1 models are a collection of pretrained and instruction fine-tuned large language models (LLMs) in 8B, 70B, and 405B sizes that support a broad range of use cases. They are particularly suited for developers, researchers, and businesses to use for text summarization and classification, sentiment analysis, language translation, and code generation.

According to Meta, Llama 3.1 405B is one of the best and largest publicly available foundation models (FMs), setting a new standard for generative AI capabilities. It is particularly well suited for synthetic data generation and model distillation, which improves smaller Llama models in post-training. The models also provide state-of-the-art capabilities in general knowledge, math, tool use, and multilingual translation.

All of the new Llama 3.1 models demonstrate significant improvements over previous versions, thanks to vastly increased training data and scale. The models support a 128K context length, up from 8K in Llama 3: an increase of 120K tokens, or 16 times the capacity of Llama 3 models. They also offer improved reasoning for multilingual dialogue use cases in eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

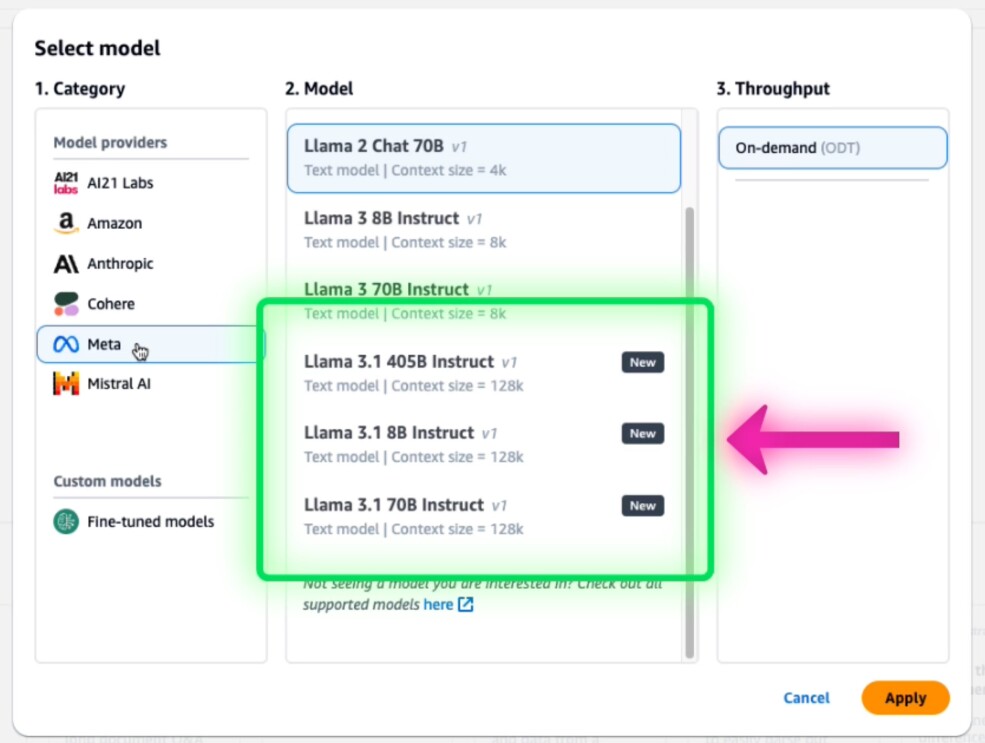

Llama 3.1 models in Amazon Bedrock

Other improvements include an enhanced grasp of linguistic nuances, meaning Llama 3.1 shows improved contextual understanding and can handle complexities more effectively. The models can also access more information from lengthy passages of text to make more informed decisions, as well as leverage richer contextual data to generate more subtle and refined responses.

“We have a longstanding relationship with Meta and are excited to make their most advanced models available to AWS customers today,” said Matt Garman, CEO of AWS. “Customers love the ability to customize and optimize Llama models specific to their individual use cases, and with Llama 3.1 now available on AWS, customers have access to the latest state-of-the-art models for building AI applications responsibly.”

For the past decade, Meta has been focused on putting tools into the hands of developers, and fostering collaboration and advancements among developers, researchers, and organizations. Llama models are available in a range of parameter sizes, enabling developers to select the model that best fits their needs and inference budget. Llama models on AWS open up a world of possibilities because developers don’t need to worry about scalability or managing infrastructure. AWS offers a simple turnkey way to get started using Llama.

“Open source is the key to bringing the benefits of AI to everyone,” said Mark Zuckerberg, founder and CEO, Meta. “We've been working with AWS to integrate the entire Llama 3.1 collection of models into Amazon SageMaker JumpStart and Amazon Bedrock, so developers can use AWS's comprehensive capabilities to build awesome things like sophisticated agents that can tackle complex tasks.”

Llama 3.1 405B

- Ideal for enterprise applications and research and development (R&D)

- Use cases include: long-form text generation, multilingual and machine translation, coding, tool use, enhanced contextual understanding, and advanced reasoning and decision-making

Llama 3.1 70B

- Ideal for content creation, conversational AI, language understanding, and R&D

- Use cases include: text summarization, text classification, sentiment analysis and nuanced reasoning, language modeling, code generation, and following instructions

Llama 3.1 8B

- Ideal for limited computational power and resources, as well as mobile devices

- Faster training times

- Use cases include: text summarization and classification, sentiment analysis, and language translation

Amazon Bedrock, which offers tens of thousands of customers secure, easy access to the widest choice of high-performing, fully managed LLMs and other FMs, as well as leading ease-of-use capabilities, is the easiest place for customers to get started with Llama 3.1.

Customers seeking to access Llama 3.1 models while using AWS security capabilities and features can do so in Amazon Bedrock with a simple API, without having to manage any underlying infrastructure. At launch, customers can take advantage of the responsible AI capabilities provided by Llama 3.1. These models will work with Amazon Bedrock’s data governance and evaluation features, including Guardrails for Amazon Bedrock and Model Evaluation on Amazon Bedrock. Customers will also be able to customize the models using fine-tuning, which is coming soon.
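For a sense of what a simple API call to a Llama model in Amazon Bedrock can look like, here is a minimal Python sketch using the Converse API through the boto3 `bedrock-runtime` client, with an optional guardrail attached. The model ID, the guardrail identifier and version, and the inference settings are illustrative assumptions to verify against the Amazon Bedrock console and documentation; `build_converse_kwargs` and `ask_llama` are hypothetical helper names.

```python
"""Sketch: calling a Llama 3.1 model through the Amazon Bedrock Converse API.

Assumptions: the model ID is a placeholder to confirm for your Region, and any
guardrail ID/version passed in refers to a guardrail you have already created.
"""
from typing import Optional

# Placeholder model ID; confirm the exact ID (or inference profile) in the console.
LLAMA_MODEL_ID = "meta.llama3-1-70b-instruct-v1:0"


def build_converse_kwargs(prompt: str, model_id: str = LLAMA_MODEL_ID,
                          guardrail_id: Optional[str] = None,
                          guardrail_version: str = "DRAFT") -> dict:
    """Assemble keyword arguments for the `converse` operation."""
    kwargs = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": 512, "temperature": 0.5, "topP": 0.9},
    }
    if guardrail_id is not None:
        # Attach an existing Amazon Bedrock guardrail to screen the exchange.
        kwargs["guardrailConfig"] = {
            "guardrailIdentifier": guardrail_id,
            "guardrailVersion": guardrail_version,
        }
    return kwargs


def ask_llama(client, prompt: str, **options) -> str:
    """Send one user turn and return the text of the model's reply."""
    response = client.converse(**build_converse_kwargs(prompt, **options))
    return response["output"]["message"]["content"][0]["text"]

# Example usage (requires boto3, AWS credentials, and Bedrock model access):
#   import boto3
#   bedrock = boto3.client("bedrock-runtime", region_name="us-east-1")
#   print(ask_llama(bedrock, "Summarize this market commentary in three bullets: ..."))
```

Because the Converse request shape is shared across Bedrock models, switching to a different Llama size is mostly a matter of changing `model_id`.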

Amazon SageMaker is the best place for data scientists and ML engineers to pre-train, evaluate, and fine-tune FMs with advanced techniques, and deploy FMs with fine-grained controls for generative AI use cases that have stringent requirements on accuracy, latency, and cost. Today, customers can discover and deploy all Llama 3.1 models in just a few clicks via Amazon SageMaker JumpStart. With fine-tuning coming soon, data scientists and ML engineers will be able to take building with Llama 3.1 one step further, for example by adapting Llama 3.1 to their specific datasets in mere hours.
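For the SageMaker JumpStart path, the sketch below prepares a request body for a deployed Llama 3.1 instruct endpoint. The prompt follows Meta's published Llama 3 instruct chat template; the payload schema (`inputs` plus `parameters`), the JumpStart model ID in the usage comment, and the helper names `format_llama3_prompt` and `build_jumpstart_payload` are assumptions to confirm against the model's page in SageMaker JumpStart, not a definitive client.

```python
"""Sketch: building a request for a Llama 3.1 instruct endpoint on SageMaker.

Assumption: the endpoint accepts a text-generation payload of the form
{"inputs": <prompt string>, "parameters": {...}}.
"""
from typing import Optional


def format_llama3_prompt(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Wrap a single user turn in the Llama 3 instruct chat template."""
    parts = ["<|begin_of_text|>"]
    if system_prompt:
        parts.append(f"<|start_header_id|>system<|end_header_id|>\n\n{system_prompt}<|eot_id|>")
    parts.append(f"<|start_header_id|>user<|end_header_id|>\n\n{user_message}<|eot_id|>")
    # Leave the assistant header open so the model writes the reply next.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


def build_jumpstart_payload(user_message: str, system_prompt: Optional[str] = None,
                            max_new_tokens: int = 256) -> dict:
    """Assemble a text-generation request body (assumed schema, see above)."""
    return {
        "inputs": format_llama3_prompt(user_message, system_prompt),
        "parameters": {
            "max_new_tokens": max_new_tokens,
            "temperature": 0.6,
            "top_p": 0.9,
            "stop": "<|eot_id|>",  # stop generating at the end-of-turn token
        },
    }

# Example usage (requires the SageMaker Python SDK and an AWS account; the model
# ID is a hypothetical placeholder, and accept_eula=True accepts Meta's license):
#   from sagemaker.jumpstart.model import JumpStartModel
#   model = JumpStartModel(model_id="meta-textgeneration-llama-3-1-8b-instruct")
#   predictor = model.deploy(accept_eula=True)
#   print(predictor.predict(build_jumpstart_payload("Classify the sentiment: I love it!")))
```

Keeping the template logic in plain functions makes it easy to check the exact prompt string before paying for endpoint time.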

“Amazon Bedrock is the easiest place to quickly build with Llama 3.1, leveraging industry-leading privacy and data governance, evaluation features, and built-in safeguards. Amazon SageMaker—with its choice of tools and fine-grain control—empowers customers of all sizes and across all industries to easily train and tune Llama models to power generative AI innovation on AWS,” Garman said.

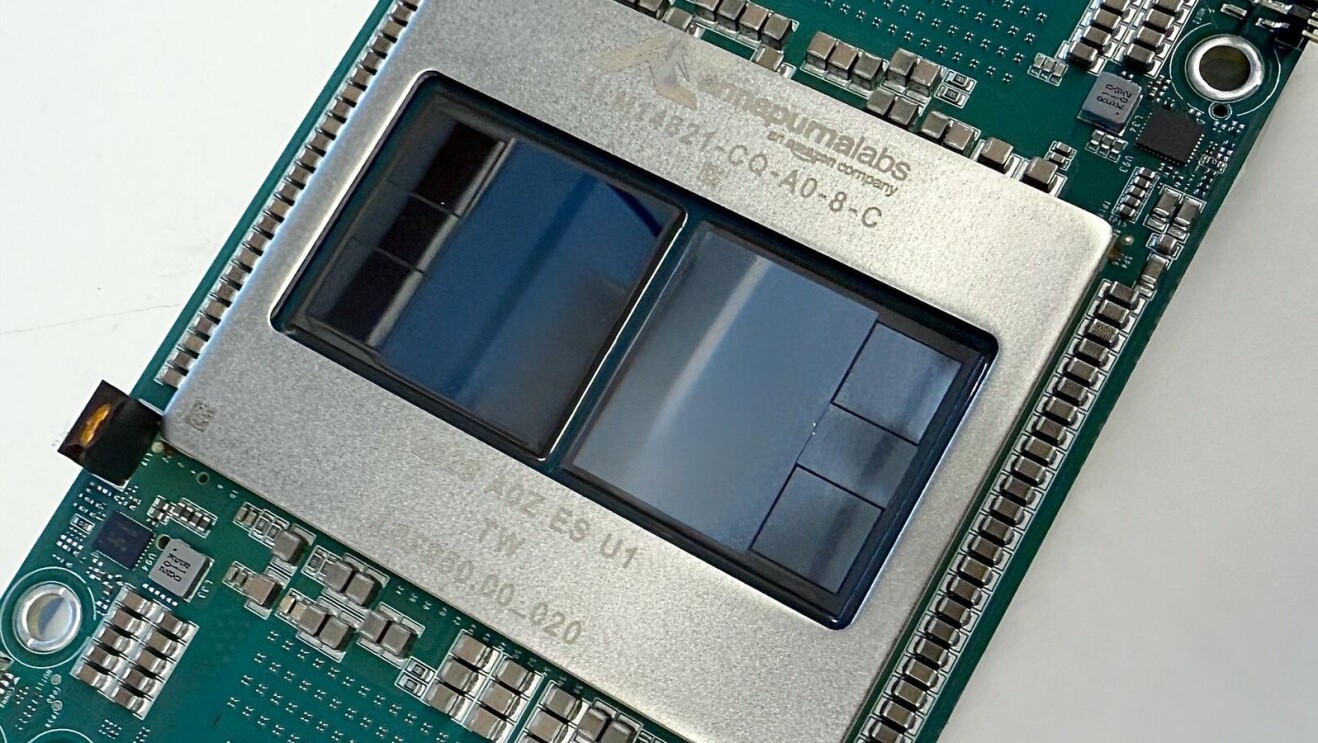

For customers who want to deploy Llama 3.1 models on AWS through self-managed machine learning workflows for greater flexibility and control of underlying resources, Amazon Elastic Compute Cloud (Amazon EC2) accelerated computing provides a broad choice of compute options. AWS Trainium and AWS Inferentia2 enable high-performance, cost-effective fine-tuning and deployment of Llama 3.1 models on AWS. Customers can get started with Llama 3.1 on AWS AI chips using Amazon EC2 Trn1 and Inf2 instances.

Customers are already using Llama models on AWS

- Nomura, a global financial services group spanning 30 countries and regions, is using Llama models in Amazon Bedrock to simplify the analysis of dense industry documents to extract relevant business information, empowering employees to spend more time drawing insights and deriving key intel from data sources like log files, market commentary, or raw documents.

- TaskUs, a leading provider of outsourced digital services and customer experiences, uses Llama models in Amazon Bedrock to power TaskGPT, a proprietary generative AI platform, on which TaskUs builds intelligent tools that automate parts of the customer service process across channels, freeing up teammates to address more complex issues and delivering better customer experiences overall.

Amazon Bedrock and Amazon SageMaker are part of distinct layers of the “generative AI stack.” Find out what this means, and why Amazon is investing deeply across all three layers.

To learn more about how AWS AI chips deliver high performance and low cost for Meta Llama 3.1 models on AWS, visit the AWS Machine Learning Blog and take a look inside the lab where AWS makes custom chips.
