December 6, 2024 10:01 AM

That’s a wrap on AWS re:Invent 2024

That's a wrap on AWS re:Invent 2024 in Las Vegas, Nevada. Generative AI was again a major focus, with Amazon Web Services (AWS) CEO Matt Garman, AWS Vice President of Data and AI Swami Sivasubramanian, and special guest Amazon CEO Andy Jassy announcing a range of innovations and sharing behind-the-scenes insights. Announcements included Amazon Nova foundation models, general availability of Trainium2 instances, the upcoming Trainium3 chip, new capabilities for Amazon Q, and more.

December 5, 2024 4:16 PM

Canva, Too Good To Go reveal how AWS enabled global expansion

Two AWS customers took to the re:Invent stage Thursday morning with Amazon CTO Dr. Werner Vogels, sharing how AWS helped them on their journeys in managing complexity.

How AWS powers 450 Canva designs per second

Brendan Humphreys, CTO of Canva, highlighted how AWS has been instrumental in the company’s growth. Founded just over a decade ago, Canva has become a global design powerhouse, with more than 220 million users across 190 countries. Today, the platform handles 1.2 million requests a day and sees 450 new designs created every second. Humphreys described how Canva’s architecture evolved from a simple monolith running on Amazon EC2 to a sophisticated microservices architecture, with AWS services like Amazon RDS for MySQL and, later, Amazon DynamoDB enabling Canva to scale seamlessly.

Humphreys also explained how AWS is helping Canva build an ecosystem for developers. Canva offers the Apps SDK, for building embedded functionality within Canva, and the Connect API, for integrating Canva’s capabilities into other platforms. With these tools, Canva has fostered a thriving marketplace of more than 300 apps created by thousands of developers.

How AWS helps Too Good To Go save four meals per second

Robert Christiansen, VP of Engineering at Too Good To Go, the world’s largest marketplace for surplus food, explained how AWS enabled the company to scale from a simple idea to a platform with over 100 million users worldwide. As Too Good To Go grew rapidly, AWS provided crucial solutions to keep its operations running smoothly. AWS’s global infrastructure allowed for seamless expansion into new markets like North America and Australia without building separate apps or infrastructure for each region.

With AWS services like Amazon SNS topics and Amazon SQS queues, the company can efficiently distribute updates across regions. This ensures a consistent experience for users whether they’re in Copenhagen or Cleveland. This cloud-powered efficiency translates directly into impact: Too Good To Go saves four meals per second, chipping away at the 40% of all food produced that goes to waste every year. It’s a powerful example of how cloud technology can support not just business growth but also critical environmental initiatives.
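
In the fan-out pattern described above, one published update lands in a separate queue for each region. It can be illustrated with a toy in-memory model. This is a minimal Python sketch, not the Amazon SNS or Amazon SQS API. The Topic class, the message fields and the region list are assumptions made for the example, and it is not a description of Too Good To Go’s actual setup.

```python
from collections import deque


class Topic:
    """Toy stand-in for a pub/sub topic: every subscribed queue gets a copy of each message."""

    def __init__(self):
        self.subscribers = []

    def subscribe(self, queue: deque) -> None:
        self.subscribers.append(queue)

    def publish(self, message: dict) -> None:
        # Fan-out: each subscriber receives its own copy, so consumers never share state.
        for queue in self.subscribers:
            queue.append(dict(message))


# One queue per region; the region codes are used purely for illustration.
queues = {region: deque() for region in ("eu-north-1", "us-east-2", "ap-southeast-2")}
topic = Topic()
for q in queues.values():
    topic.subscribe(q)

topic.publish({"store_id": 42, "bags_available": 3})

# Each regional consumer drains its own queue independently of the others.
for region, q in queues.items():
    while q:
        print(region, q.popleft())
```

Because each region reads from its own queue, a slow consumer in one region does not hold up updates in another.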

December 5, 2024 3:30 PM

Scale your impact with the Now Go Build CTO Fellowship

Closing his AWS re:Invent keynote Thursday, Amazon CTO Werner Vogels said it was technologists’ responsibility “to help solve some of the world’s hardest problems.”

It’s why, last year, he set up the Now Go Build CTO Fellowship in collaboration with AI for Changemakers led by Tech To The Rescue and the AWS Social Responsibility and Impact team, aimed at CTOs and senior engineering leaders whose organizations are driving positive change through AI and cloud technology.

This fellowship is tailored for aspiring tech leaders in nonprofits and social impact organizations—providing fellows with training, mentorship, and access to AWS experts to accelerate their growth and the development of tech solutions that can make a profound difference in areas such as disaster management, climate, health and beyond.

It’s not just about sharpening technical skills; it’s about expanding your leadership abilities, scaling your impact, and being part of a community that’s redefining how technology can change the world. For more information and to apply, visit Vogels’ blog.

December 5, 2024 2:45 PM

Amazon CTO shares his 6 tips for managing complexity

Amazon CTO Dr. Werner Vogels looked back on his 20-year career at the company during his re:Invent keynote Thursday and reflected on how AWS has continued to innovate to solve complex problems for customers.

Complexity “sneaks in” all the time, said Vogels. It’s inevitable as systems grow, and in general it’s a good thing, because it probably means you’re adding functionality. The key is how you manage it.

Vogels shared six lessons for dealing with complexity with the re:Invent audience. He framed them as lessons in “simplexity” that he has learned at Amazon over the years.

1. Make evolvability a requirement

Your systems will grow, and you will need to revisit the architecture choices you’ve made. Vogels recalled that when the team started building Amazon Simple Storage Service (Amazon S3), “we knew we wouldn’t be using [the] same architecture in a year from now.” Building an architecture that can evolve in a controlled way is crucial if you want to accommodate future changes easily.

Evolvability differs from maintainability, he pointed out. Evolvability is a long-term strategy for dealing with complexity over time. Maintainability is about making sure the system works properly in the short term. With Amazon S3, he said, AWS has kept adding new functionality without any impact on customers. It never compromised on the core attributes and moved customers along without them even noticing.

2. Break complexity into pieces

Vogels said Amazon CloudWatch (a service that monitors AWS applications, responds to performance changes, optimizes resource use, and provides insights into operational health) is a great example of disaggregation. AWS took a massive service and broke it into smaller building blocks that can evolve over time. As the system grew, it took on a new level of complexity. AWS split it into a series of smaller, loosely coupled components with high cohesion and well-defined APIs so they can easily talk to each other. That has let AWS provide a very simple front-end service, rewritten many times over the years to offer customers new features without disruption.

3. Align organization to architecture

Andy Warfield, AWS vice president and distinguished engineer, joined Vogels on stage to explain lesson 3: how to align your organization to your architecture. Amazon S3 has been around for 18 years, he said, and although the team is still learning every day, there are things it gets right. As you build more and more complex systems, he said, you have to acknowledge that your organization usually gets at least as complex as the software you’re building.

The first key to building successful teams is not being complacent. Even when things are going well, you still need to look out for what could go wrong and keep asking questions. This spirit of constructively challenging the status quo, “poking holes and finding stuff,” has helped the Amazon S3 team succeed in its mission to provide durability for customers.

The second key, said Warfield, is to focus on ownership: bring your teams a problem, and give them the agency and space to go solve it. It’s the leader’s job to give teams this agency while maintaining a sense of urgency to help them deliver on time.

4. Organize into cells

As you become more successful and things start to grow, any disturbance in operations can impact customers, said Vogels, and managing this can be highly complex. By decomposing services into cell-based architectures, you can isolate an issue to one cell without impacting the others. Ideally, a cell should be big enough to handle the biggest workload you can imagine, but also small enough to test at full scale. It’s always a balancing act. Over time, though, it will help you maintain reliability and security and reduce the scope of impact for your customers.
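
A common way to assign traffic to cells is to map each customer deterministically to a single cell. A fault then stays contained to that cell’s customers. The sketch below assumes a hypothetical fixed list of four cells and hashes a customer ID to pick one. It illustrates the idea only and does not describe how any AWS service actually partitions its cells.

```python
import hashlib

# Hypothetical cell identifiers; real deployments size and name cells differently.
CELLS = ["cell-0", "cell-1", "cell-2", "cell-3"]


def cell_for(customer_id: str, cells=CELLS) -> str:
    """Deterministically map a customer to one cell, so a failure in that cell
    only affects the customers assigned to it."""
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(cells)
    return cells[index]


print(cell_for("customer-1234"))
```

Plain modulo hashing reassigns many customers whenever the number of cells changes. Production cell routers often keep an explicit customer-to-cell mapping so that new cells can be added without a mass migration.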

5. Design predictable systems

Build a system that’s highly predictable, said Vogels, and you will reduce the impact of uncertainty. An event-driven architecture can be valuable in dealing with this situation. Take Amazon Route 53, which provides highly available and scalable Domain Name System (DNS), domain name registration, and health-checking cloud services. Instead of continuously reconfiguring the DNS database, focusing on health-checking after requests are received makes the overall processing highly predictable.

6. Automate complexity

Many of the crucial tasks developers and engineers need to get done are hard work, and just plain boring, said Vogels. But rather than asking “What should we automate?”, the right question, he said, is “What don’t we automate?” That’s why you should automate everything that doesn’t require high judgment. For example, customers can use Amazon Bedrock Serverless Agentic Workflows to create agentic ticket triage systems. In these systems, autonomous AI agents specialized for well-defined use cases either resolve an issue themselves or escalate it to a human when necessary.
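
The resolve-or-escalate behavior of such a triage agent reduces to a simple rule. The agent acts on its own only for well-defined categories where it is confident, and it hands everything else to a person. This hypothetical Python sketch does not call Amazon Bedrock. The categories, the confidence field and the 0.8 threshold are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    ticket_id: int
    category: str      # e.g. "password_reset", "billing_dispute" (hypothetical labels)
    confidence: float  # how sure a (hypothetical) classifier is about the category


# Well-defined categories a specialized agent may resolve on its own (assumed).
AUTOMATABLE = {"password_reset", "order_status"}
CONFIDENCE_THRESHOLD = 0.8


def triage(ticket: Ticket) -> str:
    """Resolve well-defined, high-confidence tickets automatically; escalate the rest."""
    if ticket.category in AUTOMATABLE and ticket.confidence >= CONFIDENCE_THRESHOLD:
        return "resolved_by_agent"
    # Anything ambiguous or requiring judgment goes to a human.
    return "escalated_to_human"


print(triage(Ticket(1, "password_reset", 0.95)))
```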

Ever since AWS began, he said, our mission has been to take on the burden of complexity for customers: we want to take on more and more of that for you, and we’ll continue to do everything we can to make the customer experience as simple as possible.

Vogels ended by urging the members of the audience to share their lessons with each other. He recognized the efforts of AWS Heroes, a worldwide group of AWS experts who go above and beyond to share knowledge and build community.

December 5, 2024 10:21 AM

5 tech predictions for 2025 and beyond, according to Amazon CTO Dr. Werner Vogels

From a new era of energy efficiency to a mission-driven tech workforce, Amazon’s CTO predicts what's coming in 2025.

- The workforce of tomorrow is mission-driven

- A new era of energy efficiency drives innovation

- Technology tips the scales in the discovery of truth

- Open data drives decentralized disaster preparedness

- Intention-driven consumer technology takes hold

December 4, 2024 5:41 PM

AWS unveils new partner innovations at re:Invent 2024

Dr. Ruba Borno, vice president, Global Specialists and Partners, AWS, announced innovations in cloud security and partner collaborations during her keynote at re:Invent Wednesday.

Borno announced the launch of four new security specializations, including the Security AI Category, so customers can identify partners with deep expertise in protecting AI applications and in defending against cyberattacks.

Borno also announced that Buy with AWS is now generally available. This feature allows partners to integrate AWS Marketplace directly into their websites, enabling customers to discover solutions, request pricing, and make purchases using their AWS accounts.

She announced the preview of Partner Connections, a unified co-sell experience that simplifies deal structures across multiple partners on a single customer project. Additionally, Borno announced enhancements to the Migration Acceleration Program, including the removal of earnings caps on modernization deals.

On stage, several partners shared their latest collaborations, powered by AWS. Leaders from Wiz, a cloud security platform, and AWS partner Ahead discussed their work with customer Turnitin, an online plagiarism checker, to move fast while prioritizing security.

Cloud consulting company Caylent shared its work with Life360, a location sharing and security app, to support Life360’s mission to keep people safe. And representatives from AWS partner Slalom and biotech company Bayer described their collaboration to enhance crop science capabilities.

Looking ahead to 2025, AWS will be expanding the strategic role startups play in its partner network, with a new Specialization Program to help startups grow and scale their businesses.

December 4, 2024 3:30 PM

Sound stem separation startup wins $100,000 AWS Unicorn Tank pitch competition

A San Francisco-based startup that specializes in groundbreaking sound stem separation technology took the top prize at the AWS Unicorn Tank pitch competition at re:Invent this afternoon.

AudioShake, which uses AI to recognize different components in a piece of audio and then isolates the sound stems so they can be used for new purposes, was one of eight startups pitching their cutting-edge generative AI ideas to a panel of investors, AI pioneers, and industry leaders.

The competition runner-up, chosen by the re:Invent audience, was Qodo, a startup building a coding platform for debugging, which walked away with $25,000.

The six other participants were Splash Music, Culminate, Jam & Tea Studios, Unravel Carbon, HeroGuest, and Capitol A.

All eight startups are part of a broader group of 80 from around the world selected by AWS to participate in the AWS Generative AI Startup Accelerator, a global 10-week hybrid program designed to support and propel the next wave of generative AI startups. Each startup receives up to $1 million in AWS credits and has the chance to learn from AWS, as well as program partners such as NVIDIA and Mistral AI.

December 4, 2024 2:19 PM

From highly realistic video to simplified mortgages: how AWS customers are transforming industries with generative AI

Three AWS customers joined Swami Sivasubramanian on stage during his re:Invent keynote Wednesday to share how their generative AI journeys are powered by AWS.

Autodesk revolutionizes 3D design with AWS-powered generative AI

Autodesk, a leader in design and make technology, is revolutionizing the industry with cutting-edge 3D generative AI powered by AWS services. CTO Raji Arasu shared how Autodesk is developing unique generative AI foundation models to address complex challenges in design and creation.

At the forefront of this innovation is Project Bernini, Autodesk’s billion-parameter model that processes diverse inputs like text, sketches, and voxels to generate 2D and 3D CAD geometry. The data involved ranges from individual building designs to entire city developments. To handle that scale, Autodesk uses AWS services including Amazon DynamoDB, Amazon EMR, Amazon EKS, AWS Glue, and Amazon SageMaker. These tools have dramatically improved Autodesk’s AI development process, cutting foundation model development time in half and increasing AI productivity by 30%.

Luma AI’s Ray 2 model brings real-time video generation to Amazon Bedrock

Luma AI, a pioneer in visual intelligence, is revolutionizing the field of AI-generated video with its groundbreaking model, Luma Ray 2, soon to be available on Amazon Bedrock. CEO Amit Jain shared how the model creates highly realistic, minute-long videos with unprecedented character and story consistency, all at real-time speeds. “It’s like seeing your thoughts appear in front of you as you imagine them,” Jain said.

Trained on approximately 1,000 times more data than even the largest language models, Luma Ray 2 leverages Amazon SageMaker HyperPod for its intensive computational needs. This collaboration is set to bring Luma’s advanced creative AI capabilities to AWS customers worldwide, enabling new possibilities in film, design, and entertainment industries.

Amazon Bedrock fuels Rocket Companies’ mission to simplify the mortgage process

Rocket Companies CTO Shawn Malhotra shared how the company has developed an AI-driven platform, powered by Amazon Bedrock, which is transforming the traditionally stressful homeownership journey. At the heart of this transformation is an AI-powered chat agent that guides clients throughout their homebuying journey. It handles 70% of interactions autonomously and makes clients three times more likely to close a loan. This allows Rocket’s team to focus on more personal questions and deliver a human-centric experience. The AI system, built on AWS, assists with everything from document processing to answering complex questions about mortgage documentation, significantly reducing resolution times for client requests.

December 4, 2024 11:07 AM

AWS announces powerful new capabilities for Amazon Bedrock and biggest expansion of models to date

During his re:Invent keynote Wednesday, Swami Sivasubramanian, vice president of AI and Data at AWS, announced a range of new innovations for Amazon Bedrock. Amazon Bedrock is a fully managed service for building and scaling generative artificial intelligence (AI) applications with high-performing foundation models. The innovations give customers even greater flexibility and control to build and deploy production-ready generative AI faster. They reinforce AWS’s commitment to model choice, optimize how inference is done at scale, and help customers get more from their data. They include:

- The broadest selection of models from leading AI companies

- Access to more than 100 popular, emerging, and specialized models with Amazon Bedrock Marketplace

- New Amazon Bedrock capabilities to help customers more effectively manage prompts at scale

- Two new capabilities for Amazon Bedrock Knowledge Bases

- New Amazon Bedrock Data Automation

Amazon Bedrock Marketplace is available today. Inference management capabilities, structured data retrieval and GraphRAG in Amazon Bedrock Knowledge Bases, and Amazon Bedrock Data Automation are all available in preview. Models from Luma AI, poolside, and Stability AI are coming soon.

To learn more, visit the AWS News Blog posts on Amazon Bedrock Marketplace, prompt caching and Intelligent Prompt Routing, and data processing and retrieval capabilities.

December 4, 2024 10:14 AM

New $100 million AWS education initiative to support underserved learners

Amazon is committing up to $100 million in cloud credits to help qualifying education organizations build or scale digital learning solutions for learners from underserved and underrepresented communities.

Over the next five years, the new AWS Education Equity Initiative will provide eligible organizations worldwide, including nonprofits, social enterprises, and governments, with cloud computing credits, so they can take advantage of AWS's cloud and AI services to build innovations that can expand access to education and development opportunities to underserved learners. It will also offer technical expertise from AWS's Solution Architects who can help them with architectural guidance, best practices for responsible AI implementation, and ongoing optimization support.

This comprehensive approach will help organizations make the most of AWS services so that learners from all backgrounds have equal access to life-changing opportunities.

December 4, 2024 9:40 AM

New Amazon Bedrock Data Automation

AWS announced Amazon Bedrock Data Automation to help customers automatically extract, transform, and generate data from unstructured content at scale using a single API. This new capability can quickly and cost-effectively extract information from documents, images, audio, and videos and transform it into structured formats. That output supports use cases like intelligent document processing, video analysis, and RAG.

It can generate content using predefined defaults, such as scene-by-scene descriptions of video stills or audio transcripts. Alternatively, customers can create output based on their own data schema and then load it into an existing database or data warehouse.

Through an integration with Knowledge Bases, Amazon Bedrock Data Automation can also parse content for RAG applications. Because it includes information embedded in both images and text, this improves the accuracy and relevance of results. Amazon Bedrock Data Automation provides customers with a confidence score and grounds its responses in the original content, helping to mitigate the risk of hallucinations and increasing transparency.

Amazon Bedrock Data Automation is available in preview.

December 4, 2024 9:39 AM

New capabilities for Amazon Bedrock Knowledge Bases

Amazon Bedrock Knowledge Bases is a fully managed capability that makes it easy for customers to customize foundation model responses with contextual and relevant data using retrieval augmented generation (RAG). While Knowledge Bases already makes it easy to connect to data sources like Amazon OpenSearch Serverless and Amazon Aurora, many customers have other data sources and data types they would like to incorporate into their generative AI applications. That is why AWS is adding two new capabilities to Knowledge Bases.

Support for structured data retrieval accelerates generative AI app development

Knowledge Bases provides one of the first managed, out-of-the-box RAG solutions that enables customers to natively query their structured data where it resides for their generative AI applications. This capability helps break data silos across data sources, accelerating generative AI development from over a month to just days. Customers can build applications that use natural language queries to explore structured data stored in sources like Amazon SageMaker Lakehouse, Amazon S3 data lakes, and Amazon Redshift. With this new capability, prompts are translated into SQL queries to retrieve data results. Knowledge Bases automatically adjusts to a customer’s schema and data, learns from query patterns, and provides a range of customization options to further enhance the accuracy of their chosen use case.
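
The flow described above runs from a question to generated SQL, then to rows, then to a grounded answer. It can be illustrated with a minimal sketch using Python’s built-in sqlite3 module. The table, the data and the SQL string are hypothetical. In Knowledge Bases the service generates the SQL from the natural language prompt, and this sketch does not attempt to reproduce that step.

```python
import sqlite3

# Toy table standing in for structured data in a warehouse (hypothetical schema and data).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("EMEA", 120.0), ("EMEA", 80.0), ("AMER", 200.0)],
)

question = "What were total sales by region?"
# In the managed feature, a model would translate the question into SQL against the schema;
# here the SQL is hard-coded to show the rest of the flow.
generated_sql = "SELECT region, SUM(amount) FROM orders GROUP BY region ORDER BY region"

# Query the data where it resides, then pass the rows to the model as grounding context.
rows = conn.execute(generated_sql).fetchall()
context = "\n".join(f"{region}: {total:.2f}" for region, total in rows)
prompt = f"Answer using only this data:\n{context}\n\nQuestion: {question}"
print(prompt)
```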

Support for GraphRAG generates more relevant responses

Knowledge graphs allow customers to model and store relationships between data by mapping different pieces of relevant information like a web. These knowledge graphs can be particularly useful when incorporated into RAG, allowing a system to easily traverse and retrieve relevant parts of information by following the graph. Now, with support for GraphRAG, Knowledge Bases can enable customers to automatically generate graphs using Amazon Neptune, AWS’s managed graph database, and link relationships between entities across data, without requiring any graph expertise. Knowledge Bases makes it easier to generate more accurate and relevant responses, identify related connections using the knowledge graph, and view the source information to understand how a model arrived at a specific response.
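
Traversing a graph during retrieval lets the system pull facts that sit a few hops away from the entity in the question into the context. The sketch below shows a breadth-first traversal over a small, made-up knowledge graph held in a Python dict. The entities, relations and the related_facts function are hypothetical. This is not the Amazon Neptune or Knowledge Bases API.

```python
from collections import deque

# Tiny knowledge graph: entity -> list of (relation, entity) edges (made-up data).
GRAPH = {
    "Acme Corp": [("acquired", "Widget Inc"), ("headquartered_in", "Seattle")],
    "Widget Inc": [("makes", "Sprocket X")],
    "Sprocket X": [("recalled_in", "2023")],
    "Seattle": [],
    "2023": [],
}


def related_facts(start: str, max_hops: int = 2) -> list:
    """Breadth-first traversal from a seed entity, collecting facts within max_hops."""
    facts, seen = [], {start}
    queue = deque([(start, 0)])
    while queue:
        node, depth = queue.popleft()
        if depth == max_hops:
            continue  # do not expand beyond the hop limit
        for relation, neighbor in GRAPH.get(node, []):
            facts.append(f"{node} {relation} {neighbor}")
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append((neighbor, depth + 1))
    return facts


print(related_facts("Acme Corp", max_hops=2))
```

With max_hops=2, a question about Acme Corp does not retrieve the Sprocket X recall. Raising the limit to 3 brings that fact into the context, at the cost of a larger prompt.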

Structured data retrieval and GraphRAG in Amazon Bedrock Knowledge Bases are available in preview. Learn more about data processing and retrieval capabilities.

December 4, 2024 9:32 AM

New Amazon Bedrock capabilities to help customers more effectively manage prompts at scale

When selecting a model, developers need to balance multiple considerations, like accuracy, cost, and latency. Optimizing for any one of these factors can mean compromising on the others. To balance these considerations when deploying an application into production, customers employ a variety of techniques, like caching frequently used prompts or routing simple questions to smaller models. However, using these techniques is complex and time-consuming, requiring specialized expertise to iteratively test different approaches to ensure a good experience for end users. That’s why AWS is adding two new capabilities to help customers more effectively manage prompts at scale:

Lower response latency and costs by caching prompts

Amazon Bedrock can now securely cache prompts to reduce repeated processing, without compromising on accuracy. This can reduce costs by up to 90% and latency by up to 85% for supported models. For example, a law firm could create a generative AI chat application that answers lawyers’ questions about documents. When multiple lawyers ask about the same part of a document, Amazon Bedrock could cache that section. The section then gets processed only once and is reused each time someone asks a question about it. This reduces cost by shrinking the amount of information the model needs to process each time.
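
The law-firm example boils down to processing a shared prefix once and reusing the result. The toy PrefixCache class below is a hypothetical Python illustration of that idea, counting how often the expensive step runs. It does not reflect Amazon Bedrock’s API or how Bedrock stores cached prompts internally.

```python
import hashlib


class PrefixCache:
    """Toy prompt cache: a shared prefix (such as a contract section) is processed once,
    and later prompts that reuse the same prefix hit the cache."""

    def __init__(self):
        self._store = {}
        self.prefix_computations = 0  # how many times the expensive step actually ran

    def _process(self, prefix: str) -> str:
        # Stand-in for the model's expensive pass over the prefix tokens.
        self.prefix_computations += 1
        return hashlib.sha256(prefix.encode("utf-8")).hexdigest()

    def answer(self, prefix: str, question: str) -> str:
        if prefix not in self._store:
            self._store[prefix] = self._process(prefix)
        state = self._store[prefix]
        # Only the short question is new work; the processed prefix is reused.
        return f"answer based on {state[:8]} for {question!r}"


cache = PrefixCache()
section = "Clause 7 (Indemnification): each party shall indemnify the other."
cache.answer(section, "Who bears the risk?")
cache.answer(section, "Is liability capped?")
print(cache.prefix_computations)  # prints 1: the section was processed once
```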

Amazon Bedrock Intelligent Prompt Routing helps optimize response quality and cost

With Intelligent Prompt Routing, customers can configure Amazon Bedrock to automatically route prompts to different foundation models within a model family, helping them optimize for response quality and cost. Using advanced prompt matching and model understanding techniques, Intelligent Prompt Routing predicts the performance of each model for each request and dynamically routes requests to the model most likely to give the desired response at the lowest cost. Intelligent Prompt Routing can reduce costs by up to 30% without compromising on accuracy.
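
Conceptually, routing means predicting how well each model in a family will handle a request and picking the cheapest one that clears a quality bar. In this minimal sketch, the model names, the prices and the predicted_quality function are all hypothetical. The real feature uses its own prompt matching and model understanding techniques, which are not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    cost_per_1k_tokens: float  # hypothetical prices


# Hypothetical two-model family.
FAMILY = [Model("large", 3.00), Model("small", 0.25)]


def predicted_quality(model: Model, prompt: str) -> float:
    """Hypothetical quality predictor. A real router would use a learned model;
    this toy simply assumes the small model struggles with long prompts."""
    if model.name == "large":
        return 0.95
    return 0.9 if len(prompt.split()) < 50 else 0.6


def route(prompt: str, min_quality: float = 0.85) -> Model:
    """Pick the cheapest model whose predicted quality meets the bar."""
    for model in sorted(FAMILY, key=lambda m: m.cost_per_1k_tokens):
        if predicted_quality(model, prompt) >= min_quality:
            return model
    # If no model clears the bar, fall back to the one predicted to do best.
    return max(FAMILY, key=lambda m: predicted_quality(m, prompt))


print(route("What is the capital of France?").name)
```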

December 4, 2024 9:20 AM

Access to more than 100 popular, emerging, and specialized models with Amazon Bedrock Marketplace

Through the new Amazon Bedrock Marketplace capability, AWS is giving access to more than 100 popular, emerging, and specialized models, so customers can find the right set of models for their use case. This includes popular models such as Mistral AI’s Mistral NeMo Instruct 2407, Technology Innovation Institute’s Falcon RW 1B, and NVIDIA NIM microservices, along with a wide array of specialized models, including Writer’s Palmyra-Fin for the financial industry, Upstage’s Solar Pro for translation, Camb.ai’s text-to-audio MARS6, and EvolutionaryScale’s ESM3 generative model for biology.

Once a customer finds a model they want, they select the appropriate infrastructure for their scaling needs and easily deploy on AWS through fully managed endpoints. Customers can then securely integrate the model with Amazon Bedrock’s unified application programming interfaces (APIs), leverage tools like Guardrails and Agents, and benefit from built-in security and privacy features.

Amazon Bedrock Marketplace is available today.

December 4, 2024 9:20 AM

New in Amazon Bedrock: The broadest selection of models from leading AI companies

Luma AI’s multimodal models and software products advance video content creation with generative AI. AWS will be the first cloud provider to make Luma AI’s state-of-the-art Luma Ray 2 model, the second generation of its renowned video model, available to customers. Ray 2 marks a significant advancement in generative AI-assisted video creation, generating high-quality, realistic videos from text and images with efficiency and cinematographic quality. Customers can rapidly experiment with different camera angles and styles, create videos with consistent characters and accurate physics, and deliver creative outputs for architecture, fashion, film, graphic design, and music.

AWS will also be the first cloud provider to offer access to poolside’s malibu and point models, which excel at code generation, testing, documentation, and real-time code completion. Additionally, AWS will be the first cloud provider to offer access to poolside’s Assistant, which puts the power of poolside’s malibu and point inside of developers’ preferred integrated development environments (IDEs).

Amazon Bedrock will also soon add Stable Diffusion 3.5 Large, Stability AI’s most advanced text-to-image model. This new model generates high-quality images from text descriptions in a wide range of styles to accelerate the creation of concept art, visual effects, and detailed product imagery for customers in media, gaming, advertising, and retail.

Amazon Bedrock is also the only place customers can access the newly announced Amazon Nova models, a new generation of foundation models that deliver state-of-the-art intelligence across a wide range of tasks and industry-leading price performance.

Models from Luma AI, poolside, and Stability AI are coming soon.

December 4, 2024 9:10 AM

New Amazon SageMaker AI capabilities reimagine how customers build and scale generative AI and machine learning models

AWS announced four new innovations for Amazon SageMaker AI (an end-to-end service used by hundreds of thousands of customers to help build, train, and deploy AI models for any use case with fully managed infrastructure, tools, and workflows) to help customers get started faster with popular publicly available models, maximize training efficiency, lower costs, and use their preferred tools to accelerate generative artificial intelligence (AI) model development.

These include three innovations for Amazon SageMaker HyperPod, which helps customers efficiently scale generative AI model development across thousands of AI accelerators, reducing time to train foundation models by up to 40%. Leading startups such as Writer AI, Luma AI, and Perplexity, and large enterprises such as Thomson Reuters and Salesforce, are now accelerating model development thanks to SageMaker HyperPod.

With the three new innovations for SageMaker HyperPod announced today, AWS is making it even easier, faster, and more cost-efficient for customers to build, train, and deploy these models at scale:

New recipes help customers get started faster

Many customers want to take advantage of popular publicly available models, like Llama and Mistral, that can be customized to a specific use case using their organization’s data. However, optimizing training performance can take weeks of iterative testing. That work includes experimenting with different algorithms, carefully refining parameters, observing the impact on training, debugging issues, and benchmarking performance.

To help customers get started in minutes, SageMaker HyperPod now provides access to more than 30 curated model training recipes for some of today’s most popular publicly available models. These include Llama 3.2 90B, Llama 3.1 405B, and Mixtral 8x22B. The recipes greatly simplify getting started: they automatically load training datasets, apply distributed training techniques, and configure the system for efficient checkpointing and recovery from infrastructure failures.

Flexible training plans make it easy to meet training timelines and budgets

AWS is launching flexible training plans for SageMaker HyperPod, enabling customers to plan and manage the compute capacity required to complete their training tasks, on time and within budget. In just a few clicks, customers can specify their budget, desired completion date, and maximum amount of compute resources they need. SageMaker HyperPod then automatically reserves capacity, sets up clusters, and creates model training jobs, saving teams weeks of model training time. This reduces the uncertainty customers face when trying to acquire large clusters of compute to complete model development tasks. In cases where the proposed training plan does not meet the specified time, budget, or compute requirements, SageMaker HyperPod suggests alternate plans, such as extending the date range, adding more compute, or conducting the training in a different AWS Region, as the next best option. Once the plan is approved, SageMaker automatically provisions the infrastructure and runs the training jobs. Amazon used SageMaker HyperPod to train its newly announced Amazon Nova models, saving months of manual work that would have been spent setting up their cluster and managing the end-to-end process. The team was able to reduce training costs, while improving performance.
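
The capacity arithmetic behind a training plan can be illustrated with a small, hypothetical helper. Given the accelerator-hours a job needs, a deadline and a cap on instances, it returns the number of instances required. If the cap is too low, it returns how many days the job would take at the cap instead. The function name and the default of 16 accelerators per instance are assumptions. The sketch does not model how SageMaker HyperPod actually builds, reserves or prices plans.

```python
import math


def propose_plan(accelerator_hours: float, days_until_deadline: int,
                 max_instances: int, accelerators_per_instance: int = 16) -> dict:
    """Toy capacity check: instances needed to finish by the deadline, or, if that
    exceeds the cap, the number of days the job would take at the cap instead."""
    hours_available = days_until_deadline * 24
    instances_needed = math.ceil(
        accelerator_hours / (hours_available * accelerators_per_instance)
    )
    if instances_needed <= max_instances:
        return {"feasible": True, "instances": instances_needed, "days": days_until_deadline}
    # Alternative plan: keep the instance cap and extend the date range.
    days_at_cap = math.ceil(
        accelerator_hours / (max_instances * accelerators_per_instance * 24)
    )
    return {"feasible": False, "instances": max_instances, "days": days_at_cap}


print(propose_plan(100_000, days_until_deadline=14, max_instances=16))
```

Take a job needing 100,000 accelerator-hours with a 14-day deadline and a 16-instance cap. It would need 19 instances, so the sketch instead proposes 17 days on 16 instances. That corresponds to the “extend the date range” alternative. The other alternatives (more compute or a different AWS Region) are not modeled.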

Better task governance maximizes accelerator utilization

Increasingly, organizations are provisioning large amounts of accelerated compute capacity for model training. These resources are expensive and limited, so customers need a way to govern usage to ensure their compute resources are prioritized for the most critical model development tasks while avoiding waste and underutilization. With the new SageMaker HyperPod task governance innovation, customers can maximize accelerator utilization for model training, fine-tuning, and inference, reducing model development costs by up to 40%. With a few clicks, customers can easily define priorities for different tasks and set limits on how much compute each team or project can use. Once customers set limits across different teams and projects, SageMaker HyperPod will allocate the relevant resources, automatically managing the task queue to ensure the most critical work is prioritized.
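To make the idea of priority-based allocation under per-team limits concrete, here is a minimal Python sketch of a greedy scheduler. It is not how SageMaker HyperPod task governance is implemented or configured: the `Task` fields, the `schedule` function, the team names, and the simple admit-or-queue policy are all hypothetical, and a production scheduler would also need to handle preemption and tasks finishing, which this sketch ignores.

```python
from dataclasses import dataclass, field
import heapq


@dataclass(order=True)
class Task:
    # Hypothetical task record. Lower number = higher priority;
    # heapq pops the smallest (priority, seq) pair first.
    priority: int
    seq: int  # tie-breaker that preserves submission order
    name: str = field(compare=False)
    team: str = field(compare=False)
    accelerators: int = field(compare=False)


def schedule(tasks, team_limits, cluster_size):
    """Admit tasks in priority order while respecting per-team limits
    and total cluster capacity. Returns (admitted_names, queued_names)."""
    heap = list(tasks)  # copy so the caller's list is untouched
    heapq.heapify(heap)
    used_by_team = {team: 0 for team in team_limits}
    free = cluster_size
    admitted, queued = [], []
    while heap:
        task = heapq.heappop(heap)
        team_used = used_by_team.get(task.team, 0)
        limit = team_limits.get(task.team, 0)  # unknown teams get no quota
        if task.accelerators <= free and team_used + task.accelerators <= limit:
            free -= task.accelerators
            used_by_team[task.team] = team_used + task.accelerators
            admitted.append(task.name)
        else:
            # Waits until cluster capacity or the team's quota frees up.
            queued.append(task.name)
    return admitted, queued


# Example: a 16-accelerator cluster shared by two hypothetical teams.
tasks = [
    Task(0, 0, "prod-inference", "product", 4),
    Task(1, 1, "fine-tune", "research", 8),
    Task(2, 2, "experiment", "research", 8),
    Task(1, 3, "eval", "product", 4),
]
admitted, queued = schedule(tasks, {"research": 12, "product": 8}, cluster_size=16)
print("admitted:", admitted)  # admitted: ['prod-inference', 'fine-tune', 'eval']
print("queued:", queued)      # queued: ['experiment']
```

Because the loop keeps scanning after a task is blocked, smaller lower-priority tasks can still fill leftover capacity. That choice trades strict priority ordering for higher utilization.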

Accelerating model development and deployment using popular AI apps from AWS partners within SageMaker

Many customers use best-in-class generative AI and ML model development tools alongside SageMaker AI to conduct specialized tasks, like tracking and managing experiments, evaluating model quality, monitoring performance, and securing an AI application. However, integrating popular AI applications into a team’s workflow is a time-consuming, multi-step process. The fourth SageMaker innovation announced today is a new capability that removes this friction and heavy lifting for customers by making it easy to discover, deploy, and use best-in-class generative AI and ML development applications from leading partners, including Comet, Deepchecks, Fiddler, and Lakera Guard, directly within SageMaker. SageMaker is the first service to offer a curated set of fully managed and secure partner applications for a range of generative AI and ML development tasks. This gives customers even greater flexibility and control when building, training, and deploying models, while reducing the time to onboard AI apps from months to weeks. Each partner app is fully managed by SageMaker, so customers do not have to worry about setting up the application or continuously monitoring to ensure there is enough capacity. By making these applications accessible directly within SageMaker, customers no longer need to move data out of their secure AWS environment, and they can reduce the time spent toggling between interfaces.

All of the new SageMaker innovations are generally available to customers today. Learn more about Amazon SageMaker.

December 3, 2024 8:35 PM

Amazon CEO Andy Jassy shares his thoughts on 3 big announcements from Tuesday's keynote at re:Invent

Amazon CEO Andy Jassy discussed three major innovations announced during Tuesday's keynote:

- Amazon Nova, our new family of state-of-the-art foundation models, delivers frontier intelligence and industry-leading price performance.

- New Trn2 instances, featuring AWS’s newest Trainium2 AI chip, offer 30-40% better price performance than the current generation of GPU-based EC2 instances.

- Aurora DSQL, our new serverless distributed SQL database delivering 4x faster reads and writes than alternatives, with strong consistency and high availability across regions.

December 3, 2024 1:15 PM

AWS CEO shares new advancements in AI chips, foundation models, and more during re:Invent keynote

Matt Garman, AWS CEO

In his re:Invent keynote Tuesday morning, AWS CEO Matt Garman highlighted how the company is delivering new innovations in generative artificial intelligence (AI), including new Trainium2 instances, Trainium3 chips, Amazon Nova foundation models, and more.

Three AWS customers also joined Garman on stage to share their latest innovations.

Apple leverages AWS to accelerate AI training

Benoit Dupin, senior director of machine learning and AI, Apple

For over a decade, Apple has relied on AWS to power many of its cloud-based services. Now, this collaboration is fueling the next generation of intelligent features for Apple users worldwide, according to Benoit Dupin, Apple’s senior director of machine learning and AI.

One of the latest chapters of this collaboration is Apple Intelligence, a powerful set of features integrated across iPhone, iPad, and Mac devices. This personal intelligence system understands users and helps them work, communicate, and express themselves in new and exciting ways. From enhanced writing tools to notification summaries and even image creation, Apple Intelligence is reshaping what we can do with our devices. It is powered by Apple’s own large language models, diffusion models, and adapters and runs both locally on Apple devices and on Apple’s own servers with Private Cloud Compute.

Building and training this system requires immense computing power and infrastructure, and that’s where AWS comes in. Apple leverages AWS services across many phases of its AI and ML lifecycle. From fine-tuning models to optimization and building the final adapters ready for deployment, AWS provides the scalable, efficient, and high-performing accelerator technologies that Apple needs. This support has been crucial in helping Apple scale its training infrastructure to meet the demands of innovation.

Beyond Apple Intelligence, Apple has found many other benefits from leveraging the latest AWS services. By moving from x86 and G4 instances to Graviton and Inferentia2, respectively, Apple has achieved over 40% efficiency gains in some of its machine learning services.

“AWS’ services have been instrumental in supporting our scale and growth,” Dupin said. “And, most importantly, in delivering incredible experiences for our users.”

How JPMorgan Chase is improving the customer experience with AWS

Lori Beer, Global CIO, JPMorgan Chase

JPMorgan Chase Global CIO Lori Beer told the re:Invent audience the company is using AWS to unlock business value, as well as open up new opportunities with generative AI.

More than 5,000 data scientists at JPMC use Amazon SageMaker every month to develop AI models, as part of work to build an AI platform that could improve various banking services, including potentially reducing customer wait times and improving customer service quality. Beer said JPMC is exploring Amazon Bedrock's large language models to enable new services, such as AI-assisted trip planning for customers, or to help bankers generate personalized outreach ideas to better engage with customers.

In 2022, JPMorgan Chase started using AWS Graviton chips and saw performance benefits. By 2023, the company had nearly 1,000 applications running on AWS, including core services like deposits and payments. The company’s decision to build its data management platform Fusion on AWS is helping it quickly process large amounts of market information, enabling JPMC customers to analyze market trends faster.

PagerDuty helps customers respond to incidents more effectively using AWS generative AI

Jennifer Tejada, chairperson and CEO, PagerDuty

PagerDuty, a global leader in digital operations management, is using AWS to help its customers, including banks, media streaming services, and retailers, use generative AI to better respond to, recover from, and learn from service and operational incidents such as unplanned downtime.

PagerDuty chairperson and CEO Jennifer Tejada said the company’s new PagerDuty Advance features use AWS services including Amazon Q Business, Amazon Bedrock, and Amazon Bedrock Guardrails to power AI-assisted incident response workflows such as intelligent chat-based context and answers. Incident responders can more easily get answers to questions like “What’s the customer impact?” or “What’s changed?” By integrating with Amazon Q Business, said Tejada, PagerDuty has been able to centralize data, reducing the need for responders to switch between multiple third-party applications, while AWS responsible AI safeguards help to enforce accuracy.

December 3, 2024 10:59 AM

AWS strengthens Amazon Bedrock with industry-first AI safeguard, new agent capability, and model customization

AWS CEO Matt Garman announced a host of new capabilities for Amazon Bedrock that will help customers solve some of the top challenges the entire industry is facing when moving generative AI applications to production, while opening up generative AI to new use cases that demand the highest levels of precision.

The new capabilities for Amazon Bedrock—a fully managed service for building and scaling generative artificial intelligence (AI) applications with high-performing foundation models—will help customers prevent factual errors due to hallucinations, orchestrate multiple AI-powered agents for complex tasks, and create smaller, task-specific models that can perform similarly to a large model at a fraction of the cost and latency.

Prevent factual errors due to hallucinations

Even the most advanced, capable models can provide incorrect or misleading responses. These “hallucinations” remain a fundamental challenge across the industry, limiting the trust companies can place in generative AI, especially in regulated industries, such as healthcare, financial services, and government agencies, where accuracy is critical. Automated Reasoning checks is the first and only generative AI safeguard that helps prevent factual errors due to hallucinations using logically accurate and verifiable reasoning. By increasing the trust that customers can place in model responses, Automated Reasoning checks opens generative AI up to new use cases where accuracy is paramount. Accessible through Amazon Bedrock Guardrails (which makes it easy for customers to apply safety and responsible AI checks to generative AI applications), Automated Reasoning checks now allows Amazon Bedrock to validate factual responses for accuracy, produce auditable outputs, and show customers exactly why a model arrived at an outcome. This increases transparency and ensures that model responses are in line with a customer’s rules and policies.

Easily build and coordinate multiple agents to execute complex workflows

AI-powered agents can help customers’ applications take actions (for example, helping with an order return or analyzing customer retention data) by using a model’s reasoning capabilities to break down a task into a series of steps it can execute. Amazon Bedrock Agents makes it easy for customers to build these agents to work across a company’s systems and data sources. While a single agent can be useful, more complex tasks, like performing financial analysis across hundreds or thousands of different variables, may require a large number of agents with their own specializations. However, creating a system that can coordinate multiple agents, share context across them, and dynamically route different tasks to the right agent requires specialized tools and generative AI expertise that many companies do not have available. That is why AWS is expanding Amazon Bedrock Agents to support multi-agent collaboration, empowering customers to easily build and coordinate specialized agents to execute complex workflows.
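The coordination problem described above, routing each task to the right specialist while sharing context across agents, can be sketched in a few lines of Python. This is a hypothetical illustration, not the Amazon Bedrock Agents API: the agents are plain functions, `make_supervisor` and the agent names are invented, and simple keyword matching stands in for the model-based reasoning a supervisor agent would actually use to choose a specialist.

```python
from typing import Callable, Dict, List

# A hypothetical "agent": a function from (subtask, shared_context) to a result.
Agent = Callable[[str, Dict[str, str]], str]


def make_supervisor(specialists: Dict[str, Agent], keywords: Dict[str, List[str]]):
    """Return a supervisor that routes each subtask to the first specialist
    whose keywords appear in it, passing along results gathered so far."""

    def run(subtasks: List[str]) -> Dict[str, str]:
        context: Dict[str, str] = {}  # shared across all agents
        for subtask in subtasks:
            lowered = subtask.lower()
            for name, words in keywords.items():
                if any(w in lowered for w in words):
                    context[subtask] = specialists[name](subtask, context)
                    break
            else:
                # No specialist matched; record that instead of guessing.
                context[subtask] = "unrouted"
        return context

    return run


# Example with two toy specialists.
specialists = {
    "data": lambda task, ctx: f"data agent handled '{task}'",
    "report": lambda task, ctx: f"report built from {len(ctx)} earlier result(s)",
}
keywords = {"data": ["data", "revenue"], "report": ["report", "summary"]}
supervisor = make_supervisor(specialists, keywords)
results = supervisor(["Pull revenue data", "Write summary report", "Book a flight"])
for task, outcome in results.items():
    print(f"{task}: {outcome}")
```

The report agent sees the data agent's output through the shared `context` dictionary, which is the kind of context sharing the announcement describes; a real system would also need to handle failures and decide how much context each agent receives.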

Create smaller, faster, more cost-effective models

Even with the wide variety of models available today, it’s still challenging for customers to find one with the right mix of knowledge, cost, and latency for the unique needs of their business. Larger models are more knowledgeable but cost more and take longer to respond, while smaller models are faster and cheaper to run but less capable. Model distillation is a technique that transfers knowledge from a large model to a small model while retaining the small model’s performance characteristics. Doing this usually requires specialized machine learning (ML) expertise; however, with Amazon Bedrock Model Distillation, any customer can now distill their own model with no ML expertise required. Customers simply select the best model for a given use case and a smaller model from the same model family that delivers the latency their application requires at the right cost. After the customer provides sample prompts, Amazon Bedrock will do all the work to generate responses and fine-tune the smaller model. If needed, it can even create more sample data to complete the distillation process. This gives customers a model with the relevant knowledge and accuracy of the larger model but the speed and cost of the smaller one, making it ideal for production use cases such as real-time chat interactions. Model Distillation works with models from Anthropic, Meta, and the newly announced Amazon Nova models.
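For readers curious about the underlying idea, here is the classic soft-target formulation of knowledge distillation, in which a small "student" model is trained to match the temperature-softened output distribution of a large "teacher." This is a generic textbook sketch in plain Python, not Amazon Bedrock's implementation; the announcement describes Bedrock generating teacher responses to sample prompts and fine-tuning the smaller model on them, which is a response-based variant of the same idea. The function names and the default temperature of 2.0 are illustrative assumptions.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float) -> List[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2
    so gradient magnitudes stay comparable across temperatures."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return (temperature ** 2) * kl


# A student whose logits resemble the teacher's incurs a much smaller loss
# than one that ranks the classes the opposite way.
teacher = [4.0, 1.0, 0.2]
close_student = [3.5, 1.2, 0.3]
far_student = [0.2, 1.0, 4.0]
print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

In training, this term is usually minimized alongside an ordinary loss on ground-truth labels; the softened teacher probabilities carry information about which wrong answers are "almost right," which is much of what the student learns from.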

Automated Reasoning checks, multi-agent collaboration, and Model Distillation are all available in preview.

For more details on today’s announcements visit the AWS blog: Automated Reasoning checks, multi-agent collaboration, and Model Distillation.

December 3, 2024 10:51 AM

Next generation of Amazon SageMaker to deliver unified platform for data, analytics, and AI

At re:Invent today, AWS CEO Matt Garman unveiled the next generation of Amazon SageMaker, bringing together the capabilities customers need for fast Structured Query Language (SQL) analytics, petabyte-scale big data processing, data exploration and integration, machine learning (ML) model development and training, and generative artificial intelligence (AI) in one integrated platform.

Today, hundreds of thousands of customers use Amazon SageMaker to build, train, and deploy machine learning (ML) models, and many also rely on AWS’s comprehensive set of purpose-built analytics services to support a wide range of workloads. Increasingly, customers are using these services in combination, and would benefit from a unified environment that brings together familiar tools for things like analytics, ML, and generative AI, along with easy access to all of their data and the ability to easily collaborate on data projects with other members of their team or organization.

That’s why AWS is launching the next generation of Amazon SageMaker, with the following new features and capabilities:

SageMaker Unified Studio

This new unified studio offers a single data and AI development environment that makes it easy for customers to find and access data from across their organization. It brings together purpose-built AWS analytics, machine learning (ML), and AI capabilities so customers can act on their data using the best tool for the job across all types of common data use cases, assisted by Amazon Q Developer along the way.

SageMaker Catalog

Amazon SageMaker Catalog and its built-in governance capabilities allow the right users to access the right data, models, and development artifacts for the right purpose, enabling customers to meet enterprise security needs. Built on Amazon DataZone, SageMaker Catalog enables administrators to easily define and enforce permissions across models, tools, and data sources, while customized safeguards help make AI applications secure and compliant. Customers can also safeguard their AI models with data classification, toxicity detection, guardrails, and responsible AI policies within Amazon SageMaker.

SageMaker Lakehouse

Many customers may have data spread across multiple data lakes, as well as a data warehouse, and would benefit from an easy way to unify it. Amazon SageMaker Lakehouse provides unified access to data stored in Amazon Simple Storage Service (Amazon S3), data lakes, Redshift data warehouses, and federated data sources—reducing data silos and making it easy to use their preferred analytics and ML tools on their data, no matter how and where it is physically stored. With this new Apache Iceberg-compatible lakehouse capability in Amazon SageMaker, customers can access and work with all of their data from within SageMaker Unified Studio, as well as with familiar AI and ML tools or query engines compatible with Apache Iceberg open standards. (Apache Iceberg is a distributed, community-driven, Apache 2.0-licensed, 100% open-source data table format that helps simplify data processing on large datasets stored in data lakes.) SageMaker Lakehouse provides integrated, fine-grained access controls that are consistently applied across the data in all analytic and AI tools in the lakehouse, enabling customers to define permissions once and securely share data across their organization.

Zero-ETL integrations with SaaS applications

Traditionally, if customers wanted to connect all of their data sources to discover new insights, they’d need—in computing parlance—to “extract, transform, and load” (ETL) information in what was often a tedious and time-consuming manual effort. That’s why AWS has invested in a ‘zero-ETL’ future, where data integration is no longer arduous, and customers can easily get their data where they need it. In addition to operational databases and data lakes, many customers also have critical enterprise data stored in Software as a Service (SaaS) applications, and would benefit from easy access to this data for analytics and ML. The new zero-ETL integrations with SaaS applications make it easy for customers to access their data from applications such as Zendesk and SAP in SageMaker Lakehouse and Redshift for analytics and AI—removing the need for data pipelines, which can be challenging and costly to build, complex to manage, and prone to errors that may delay access to time-sensitive insights.

The next generation of Amazon SageMaker is available today. SageMaker Unified Studio is currently in preview and will be made generally available soon. To learn more, visit the AWS News blog.

December 3, 2024 10:49 AM

Customers scale use of Amazon Q Business as new innovations transform how employees work

AWS announced new capabilities and continued momentum for Amazon Q Business, the most capable generative artificial intelligence (AI)-powered assistant for finding information, gaining insight, and taking action at work.

The new capabilities offer customers better insights across Amazon Q Business and Amazon Q in QuickSight, enhance cross-app generative AI experiences, provide more than 50 actions for popular business applications, and make it easy to automate complex workflows—enabling employees to get more tedious, time-consuming work done faster.

Amazon Q Business is built from the ground up with security and privacy in mind, using a company’s existing identities, roles, and access permissions to personalize interactions with each user. Customers across industries and of all sizes are using Amazon Q Business, from manufacturing companies streamlining maintenance, to HR teams helping employees more easily navigate their benefit programs, and marketing teams creating content in a fraction of the time. Amazon Q Business is also helping employees across Amazon work more efficiently, including generating more than 100,000 account summaries for the AWS Sales team, and reducing the time developers spend churning on technical investigations by more than 450,000 hours.

The new Amazon Q Business features and capabilities announced today double down on some of the things Amazon Q Business does best, such as helping employees find information and work more efficiently with their preferred tools, while also providing a foundation that will make other generative AI experiences smarter.

Amazon Q unites data sources across a company to learn everything about what makes that company unique, allowing it to provide contextually relevant answers to employees by factoring in details such as a company’s core concepts, organization, and structure. To accomplish this, customers connect Amazon Q to more than 40 enterprise data sources, including Amazon Simple Storage Service (Amazon S3), Google Drive, and SharePoint, along with wikis and internal knowledge bases. Amazon Q then creates an ‘index’, to serve as a canonical source of content and data across an organization, and keeps that index up-to-date and secure. AWS is now expanding the type of data that can be indexed and how it is used to power more tailored experiences.

Unified insights across Q Business and Q in QuickSight

Lots of critical business data is stored in databases, data warehouses, and data lakes. To access this data, employees use powerful business intelligence (BI) tools such as Amazon QuickSight. Since decisions are rarely made with one kind of data alone, employees could be much more efficient if they could access this information along with unstructured data contained in documents, wikis, emails, and more, all in one place. Amazon Q Business and Amazon Q in QuickSight can now provide insight and analysis across all of these sources from either tool, so employees can streamline their workflows and accelerate decision making. Now employees working with Amazon Q, regardless of whether it is through a standalone app, embedded in a website, or through an app created with Amazon Q Apps, can get answers that include rich visuals, charts, and graphs from Amazon QuickSight.

Independent software vendor integration with the Amazon Q index

Customers can now grant independent software vendors (ISVs) access to enhance their generative AI-powered experiences with data from multiple applications, using a single application programming interface (API) to access the same index used by Amazon Q. This new ‘cross-app index’ means employees can benefit from more powerful, personalized experiences, which bring in more context from the other applications across their organization. With fine-grained permissions, customers remain fully in control of their data at all times and can grant specific ISVs access based on their needs. Additionally, customers can reduce their security risks by having AWS manage a single index on their behalf, eliminating the need for each application to make its own copy. This empowers employees to get better insights across all of their enterprise information and benefit from more personalized generative AI-powered experiences in third-party applications. Integrations with the Amazon Q index are available today through popular business applications such as Zoom, Asana, Miro, and PagerDuty, with Smartsheet and others available soon.
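The cross-app index idea (one index managed on the customer's behalf, access granted per application, and results scoped to what each user is allowed to see) can be modeled in a short Python sketch. This is a hypothetical illustration only: `SharedIndex`, `grant`, `search`, and the group names are invented and are not the Amazon Q index API, and case-insensitive substring matching stands in for real retrieval.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]  # groups permitted to see this document


class SharedIndex:
    """Hypothetical single index shared by approved applications.
    Results are filtered by the calling user's group memberships."""

    def __init__(self, docs: List[Document]):
        self._docs = docs
        self._approved_apps: Set[str] = set()

    def grant(self, app_id: str) -> None:
        # The customer explicitly approves each third-party application.
        self._approved_apps.add(app_id)

    def search(self, app_id: str, user_groups: Set[str], term: str) -> List[str]:
        if app_id not in self._approved_apps:
            raise PermissionError(f"{app_id} has not been granted access")
        term = term.lower()
        return [
            d.doc_id
            for d in self._docs
            if term in d.text.lower() and d.allowed_groups & user_groups
        ]


docs = [
    Document("d1", "Q3 sales plan", frozenset({"sales"})),
    Document("d2", "Sales compensation policy", frozenset({"hr"})),
]
index = SharedIndex(docs)
index.grant("meeting-app")
print(index.search("meeting-app", {"sales"}, "sales"))
```

Keeping one copy of the index and checking permissions at query time is what lets a single grant be revoked centrally, rather than chasing down copies held by each application.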

Library of more than 50 new actions to help with tasks

Employees waste a significant amount of time just trying to get work done across various different applications and systems. To streamline workflows, Amazon Q allows employees to take actions, such as creating an issue in Jira or a ticket in Zendesk, within the enterprise applications they use every day. Now, Amazon Q users can access a library of more than 50 new actions, allowing them to perform specific tasks across popular third-party productivity applications, such as Google Workspace, Microsoft 365, Smartsheet, and more—from creating a task in Asana to sending a private message in Teams.

New automation capability for complex workflows

AWS is also introducing a new capability to Amazon Q that uses generative AI agents to execute more complex workflows, such as processing invoices, managing customer support tickets, and onboarding new employees.

Together, the library of new actions and the new automation capability will empower employees to automate both simple and complex tasks across teams and applications, simply by having a conversation with Amazon Q.

Accessing Amazon Q Business data from Amazon Q in QuickSight, the cross-app index, and 50 new actions are generally available today. Accessing Amazon Q in QuickSight data from Amazon Q Business is in preview. The new automation capability is coming in 2025.

For more details on today’s announcements visit the AWS News Blog on the cross-app index, the 50 new actions and the automation capability.

December 3, 2024 10:15 AM

Amazon Q Developer reimagines how developers build and operate software with generative AI

AWS announced new enhancements to Amazon Q Developer, the most capable generative AI assistant for software development, including agents that accelerate unit testing, documentation, and code reviews, and an operational capability that helps operators and developers of all experience levels investigate and resolve operational issues across their AWS environment in a fraction of the time.

Amazon Q Developer can speed up a variety of software development tasks by up to 80%, already providing the highest reported code acceptance rate of any coding assistant that suggests multi-line code, code security scanning that outperforms leading publicly benchmarkable tools, and high-performing AI agents that autonomously reason and iterate to achieve complex goals. With today’s enhancements, AWS continues to remove the most tedious aspects of software development, enabling developers to boost productivity, focus on strategic and creative work, and multiply their impact. The new enhancements announced today will help developers:

Get better test coverage in a fraction of the time

Writing unit tests helps to catch potential issues early and ensure that code works as intended, but it is tedious and time-consuming to implement tests across all code. This often results in developers deprioritizing complete test coverage for speed, risking costly rollbacks on deployed code and a compromised customer experience. To reduce this burden on developers, Amazon Q Developer now automates the process of identifying and generating unit tests. Amazon Q Developer uses its knowledge of the entire project to autonomously identify and generate tests and add those tests to the project, helping developers quickly verify that the code is working as expected. Developers can now get complete test coverage with significantly less effort, ship more reliable code, and deliver features faster.

Generate and maintain accurate, up-to-date documentation

After developers write and test their code, they have to create documentation to explain how it works. However, as a project grows, keeping all the details up-to-date is a common pain point and often gets neglected, forcing developers who are new to the codebase to spend significant time figuring out how it works on their own. To remove this heavy lifting, Amazon Q Developer now automates the process of producing and updating documentation, making it easy for developers to maintain accurate, detailed information on their projects. At the same time, equipped with accurate documentation, teammates no longer need to invest hours trying to understand what a piece of code does and can now confidently jump into projects with more meaningful contributions.

Deploy higher quality code with automated code reviews

One of the final steps before deployment is having another developer perform a code review to check that the code adheres to their organization’s quality, style, and security standards. However, it can take days waiting for feedback and going back-and-forth on revisions. To streamline this process and catch more issues sooner, Amazon Q Developer now automates code reviews, quickly providing feedback for developers, so they can stay in flow while maintaining code quality based on engineering best practices. By acting as a first reviewer, Amazon Q helps developers detect and resolve code-quality issues earlier, saving them time on future reviews.

Resolve operational issues quickly

Once an application is written and deployed in production, operational teams work to make sure it is performing as expected. However, it is a trial-and-error process to find and fix issues, and it can take hours of manually sifting through vast amounts of data, prolonging disruptions for customers.

AWS has more operational experience and scale than any other major cloud provider from running the world’s largest and most reliable cloud, and has built that experience into the new operational capability for Amazon Q Developer. Amazon Q Developer now helps operators and developers of all experience levels investigate and resolve operational issues across their AWS environment in a fraction of the time.

Utilizing its deep knowledge of an organization’s AWS resources, it can quickly sift through hundreds of thousands of data points to discover relationships between services, develop an understanding of how they work together, and identify anomalies across related signals. After analyzing its findings, Amazon Q presents users with potential hypotheses for the root cause of the issue and guides users through how to fix it—a combination of capabilities that no other major cloud provider offers. Throughout an investigation, Amazon Q compiles all findings, actions, and suggested next steps in Amazon CloudWatch for the team to collaborate on and learn from to prevent future issues.

Amazon Q Developer is available everywhere developers need it, including the AWS Management Console, through a new integrated offering with GitLab, integrated development environments (IDEs), and more. The three new agentic capabilities are generally available in IDEs today and in preview via the new integrated offering with GitLab. The new operational capability is available in preview.

December 3, 2024 10:13 AM

New Amazon Q Developer capabilities accelerate large-scale transformations of legacy workloads

AWS announced new capabilities for Amazon Q Developer—the most capable generative AI assistant for software development—that take the undifferentiated heavy-lifting out of complex and time-consuming application migration and modernization projects, saving customers and partners time and money.

Many organizations have a large number of legacy applications that often require specialized expertise to operate, and are expensive and time-consuming to maintain. Although they want to move away from these technologies, modernizing them can take months or years of tedious work. As a result, organizations stall or postpone projects entirely, to prioritize building new applications and experiences.

Amazon Q Developer makes these kinds of projects easier by using AI-powered agents to automate the heavy lifting involved in upgrading and modernizing—from autonomously analyzing source code, to generating new code and testing it, to executing the change once approved by the customer.

For example, earlier this year, Amazon used Amazon Q Developer to migrate tens of thousands of production applications from older versions of Java to Java 17. This effort saved more than 4,500 years of development work and delivered performance improvements along with $260 million in annual cost savings. Now, AWS is extending this technology to support more large-scale legacy transformation projects.

The new capabilities for Amazon Q Developer will help customers:

- Modernize Windows .NET applications to Linux up to four times faster and reduce licensing costs by up to 40%.

- Transform VMware workloads to cloud-native architectures, converting on-premises network configurations into AWS equivalents in hours instead of weeks.

- Accelerate mainframe modernization by streamlining labor-intensive work like code analysis, documentation, planning, and refactoring applications.

To help customers and partners more efficiently collaborate on large-scale transformation projects, all three of these capabilities are available through a new Amazon Q Developer web application. The VMware and mainframe modernization capabilities are only available through this new experience, while developers can also perform Windows .NET transformations within their IDE.

All of these new Amazon Q Developer capabilities are available in preview today. Learn more about them.

December 3, 2024 9:53 AM

Introducing Amazon Nova

As the next step in our AI journey, we’ve built Amazon Nova, a new generation of foundation models (FMs). With the ability to process text, image, and video as prompts, customers can use Amazon Nova-powered generative AI applications to understand videos, charts, and documents, or generate videos and other multimedia content. The new Amazon Nova models available in Amazon Bedrock include: Amazon Nova Micro, Amazon Nova Lite, Amazon Nova Pro, Amazon Nova Premier, Amazon Nova Canvas, and Amazon Nova Reel. All Amazon Nova models are incredibly capable, fast, cost-effective and have been designed to be easy to use with a customer’s systems and data. Using Amazon Bedrock, customers can easily experiment with and evaluate Amazon Nova models, as well as other FMs, to determine the best model for an application.

Amazon Nova Reel transforms a single image input into a brief video with the prompt: dolly forward.
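Because Amazon Bedrock exposes Nova and other FMs behind one Converse API, the side-by-side evaluation described above can be scripted. The sketch below is illustrative only: it assumes boto3's `bedrock-runtime` client and the Nova model IDs listed in the code (availability varies by Region, and some Regions require a cross-Region inference profile ID instead). The helper names `build_converse_request` and `compare_models` are hypothetical, and the `converse` callable is passed in so the logic can be exercised without AWS credentials.

```python
"""Send one prompt to several Amazon Nova text models through the Bedrock Converse API."""

from typing import Any, Callable

# Assumed on-demand model IDs; availability varies by Region, and some Regions
# require a cross-Region inference profile ID such as "us.amazon.nova-lite-v1:0".
NOVA_MODEL_IDS = [
    "amazon.nova-micro-v1:0",
    "amazon.nova-lite-v1:0",
    "amazon.nova-pro-v1:0",
]


def build_converse_request(model_id: str, prompt: str, max_tokens: int = 256) -> dict[str, Any]:
    """Keyword arguments for a single-turn, text-only Converse call."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": 0.2},
    }


def compare_models(
    converse: Callable[..., dict[str, Any]], prompt: str, model_ids: list[str]
) -> dict[str, str]:
    """Send the same prompt to each model and collect the first text block of each reply."""
    replies: dict[str, str] = {}
    for model_id in model_ids:
        response = converse(**build_converse_request(model_id, prompt))
        # Converse returns the assistant turn under output -> message -> content.
        replies[model_id] = response["output"]["message"]["content"][0]["text"]
    return replies
```

With boto3 installed and Bedrock model access enabled, one could pass `boto3.client("bedrock-runtime", region_name="us-east-1").converse` as the `converse` argument. Nova Canvas and Nova Reel generate images and video, so they are left out of this text-only request shape.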

December 3, 2024 9:04 AM

New database capabilities announced including Amazon Aurora DSQL—the fastest distributed SQL database

AWS CEO Matt Garman announced new capabilities for Amazon Aurora and Amazon DynamoDB to support customers’ most demanding workloads that need to operate across multiple Regions with strong consistency, low latency, and the highest availability.

The new capabilities are available to customers whether they use SQL (structured query language) to store and process information in tabular form in a relational database, or NoSQL (‘not only SQL’) databases, which store data in non-tabular formats rather than in rule-based, relational tables.

Over the years, AWS has innovated to provide customers the widest range of high-performing and scalable databases, including the cloud-native relational database Amazon Aurora, which hundreds of thousands of customers rely on every day. Aurora removes the need for customers to make trade-offs by providing the performance of enterprise-grade commercial databases with the flexibility and economics of open source. With today’s announcements, AWS is reimagining the relational database again to deliver strong consistency, global availability, and virtually unlimited scalability, without forcing customers to choose between low latency and SQL.

The new capabilities are:

Introducing Amazon Aurora DSQL—the fastest distributed SQL database

This new serverless, distributed SQL database enables customers to build applications with 99.999% multi-Region availability, strong consistency, PostgreSQL compatibility, four times faster reads and writes compared to other popular distributed SQL databases, virtually unlimited scalability, and zero infrastructure management. Aurora DSQL overcomes two historical challenges of distributed databases—achieving multi-Region strong consistency with low latency, and syncing servers with microsecond accuracy around the globe. By solving these, Amazon Aurora DSQL enables customers to build globally distributed applications on an entirely new scale.

Enhancements to Amazon DynamoDB global tables

DynamoDB was the first fully managed, serverless, NoSQL database, and it transformed what internet-scale applications could achieve by redefining performance and simplifying operations with zero infrastructure management and consistent single-digit millisecond performance at any scale. Today, customers of virtually every industry and size are building and modernizing their critical applications with DynamoDB global tables, a multi-Region, multi-active database that provides 99.999% availability. AWS is now using the same underlying technology as Aurora DSQL to enhance DynamoDB global tables, adding the option of strong consistency to the highest availability, virtually unlimited scalability, and zero infrastructure management that global tables already offer. This ensures customers' multi-Region applications always read the latest data without having to change any application code.
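In application code, a strongly consistent read is requested with DynamoDB's existing `ConsistentRead` parameter on `GetItem`, which is why no code change is needed. The sketch below assumes a hypothetical global table named `Orders` with a string partition key `order_id` and a `status` attribute, already configured for multi-Region strong consistency (in preview); the helper names are also hypothetical. The `get_item` callable stands in for boto3's low-level `client.get_item`, which accepts the same keyword arguments.

```python
"""Strongly consistent point read, e.g. against a DynamoDB global table replica."""

from typing import Any, Callable, Optional


def build_get_item_request(table_name: str, order_id: str) -> dict[str, Any]:
    """Low-level GetItem arguments for a hypothetical table keyed on order_id."""
    return {
        "TableName": table_name,
        "Key": {"order_id": {"S": order_id}},
        # Ask for a strongly consistent read instead of the eventually consistent default.
        "ConsistentRead": True,
    }


def read_latest_status(
    get_item: Callable[..., dict[str, Any]], table_name: str, order_id: str
) -> Optional[str]:
    """Return the item's status attribute, or None if the item does not exist."""
    response = get_item(**build_get_item_request(table_name, order_id))
    item = response.get("Item")  # GetItem omits "Item" when no item matches the key
    if item is None:
        return None
    return item["status"]["S"]
```

Against a real replica, one could pass `boto3.client("dynamodb", region_name="us-east-2").get_item`; on a table configured for multi-Region strong consistency, the read is intended to reflect the latest write regardless of which Region made it.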

Both Amazon Aurora DSQL and multi-Region strong consistency in Amazon DynamoDB global tables are available in preview today. For more information, visit the Amazon Aurora and Amazon DynamoDB pages.

December 3, 2024 8:47 AM

Latest Amazon S3 innovations to remove complexity of working with data at unprecedented scale

AWS announced new features for Amazon Simple Storage Service (Amazon S3) that make it the first cloud object store with fully managed support for Apache Iceberg for faster analytics, and the easiest way to store and manage tabular data at any scale. These new features also include the ability to automatically generate queryable metadata, simplifying data discovery and understanding to help customers unlock the value of their data in S3.

Amazon S3 is the leading object store in the world with more than 400 trillion objects, and is used by millions of customers. Apache Iceberg is a distributed, community-driven, Apache 2.0-licensed, 100% open-source data table format that helps simplify data processing on large datasets stored in data lakes. Data engineers use Apache Iceberg because it is fast, efficient, and reliable at any scale and keeps records of how datasets change over time.

The new Amazon S3 features also remove the overhead of organizing and operating table and metadata stores on top of objects for customers, so they can shift their focus back to building with their data. The features are Apache Iceberg table-compatible, so customers can easily query their data using AWS analytics services such as Amazon Athena and Amazon QuickSight, and open-source tools including Apache Spark:

New Amazon S3 Tables—the easiest and fastest way to perform analytics on Apache Iceberg tables

Amazon S3 Tables introduce built-in Apache Iceberg table support and a new bucket type for managing Iceberg tables for data lakes. Specifically optimized for analytics workloads, S3 Tables deliver up to three times faster query performance and up to ten times higher transactions per second (TPS) compared to general purpose S3 buckets. S3 Tables automatically manage table maintenance tasks to continuously optimize query performance and storage costs, even as customers’ data lakes scale and evolve. S3 Tables also provide table-level access controls, allowing customers to define permissions for each table.

New Amazon S3 Metadata—the easiest and fastest way to discover and understand data in S3

As more customers use S3 as their central data repository, and the volume and variety of data have grown exponentially, metadata is becoming an increasingly important way to understand and organize large amounts of data. Many customers use complex metadata capture and storage systems to enrich their understanding of data and find the exact objects they need, but these systems are expensive, time-consuming, and resource-intensive to build and maintain. The new Amazon S3 Metadata automatically generates queryable object metadata in near real-time to help customers query, find, and use data for business analytics, real-time inference applications, and more—accelerating and simplifying data discovery and understanding to help customers unlock the value of their data in S3.

Amazon S3 Tables are generally available today, and Amazon S3 Metadata is available in preview. To learn more, visit the AWS News Blog posts on S3 Tables and S3 Metadata.

December 3, 2024 8:36 AM

Trainium3 chips—designed for high-performance needs of next frontier of generative AI workloads

AWS announced Trainium3, its next-generation AI chip, which will allow customers to build bigger models faster and deliver superior real-time performance when deploying them. It will be the first AWS chip made with a 3-nanometer process node, setting a new standard for performance, power efficiency, and density. Trainium3-powered UltraServers are expected to be four times more performant than Trn2 UltraServers, allowing customers to iterate even faster when building models. The first Trainium3-based instances are expected to be available in late 2025.

December 3, 2024 8:31 AM

AWS Trainium2 instances now generally available

AWS CEO Matt Garman announced the general availability of AWS Trainium2-powered Amazon Elastic Compute Cloud (Amazon EC2) instances in his re:Invent keynote on Tuesday and unveiled new Trn2 UltraServers, a completely new EC2 offering.

Highest performing Amazon EC2 instance for deep learning and generative AI

Amazon EC2 instances are virtual servers that provide software developers with resizable compute capacity for virtually any workload. The new Amazon EC2 Trn2 instances, powered by AWS Trainium2 chips, are purpose-built for high-performance deep learning (DL) training of generative AI models, including large language models (LLMs) and latent diffusion models. In fact, Trn2 is the highest-performing Amazon EC2 instance for deep learning and generative AI, offering 30-40% better price performance than the current generation of GPU-based EC2 instances. A single Trn2 instance combines 16 Trainium2 chips linked by NeuronLink, an ultra-fast, high-bandwidth, low-latency chip-to-chip interconnect, to provide 20.8 peak petaflops of compute, ideal for training and deploying models that are billions of parameters in size.

Trn2 UltraServers meet increasingly demanding AI compute needs of the world’s largest models

For the largest models that require even more compute, Trn2 UltraServers allow customers to scale training beyond the limits of a single Trn2 instance, reducing training time, accelerating time to market, and enabling rapid iteration to improve model accuracy. Trn2 UltraServers are a completely new EC2 offering that uses the ultra-fast NeuronLink interconnect to connect four Trn2 servers into one giant server. With new Trn2 UltraServers, customers can scale up their generative AI workloads across 64 Trainium2 chips. For inference workloads, customers can use Trn2 UltraServers to improve real-time inference performance for trillion-parameter models in production. Together with AI safety and research company Anthropic, AWS is building an EC2 UltraCluster of Trn2 UltraServers, named Project Rainier, which will scale out distributed model training across hundreds of thousands of Trainium2 chips interconnected with third-generation, low-latency, petabit-scale EFA networking, providing more compute than Anthropic used to train its current generation of leading AI models. When completed, it is expected to be the world’s largest AI compute cluster reported to date, available for Anthropic to build and deploy its future models on.

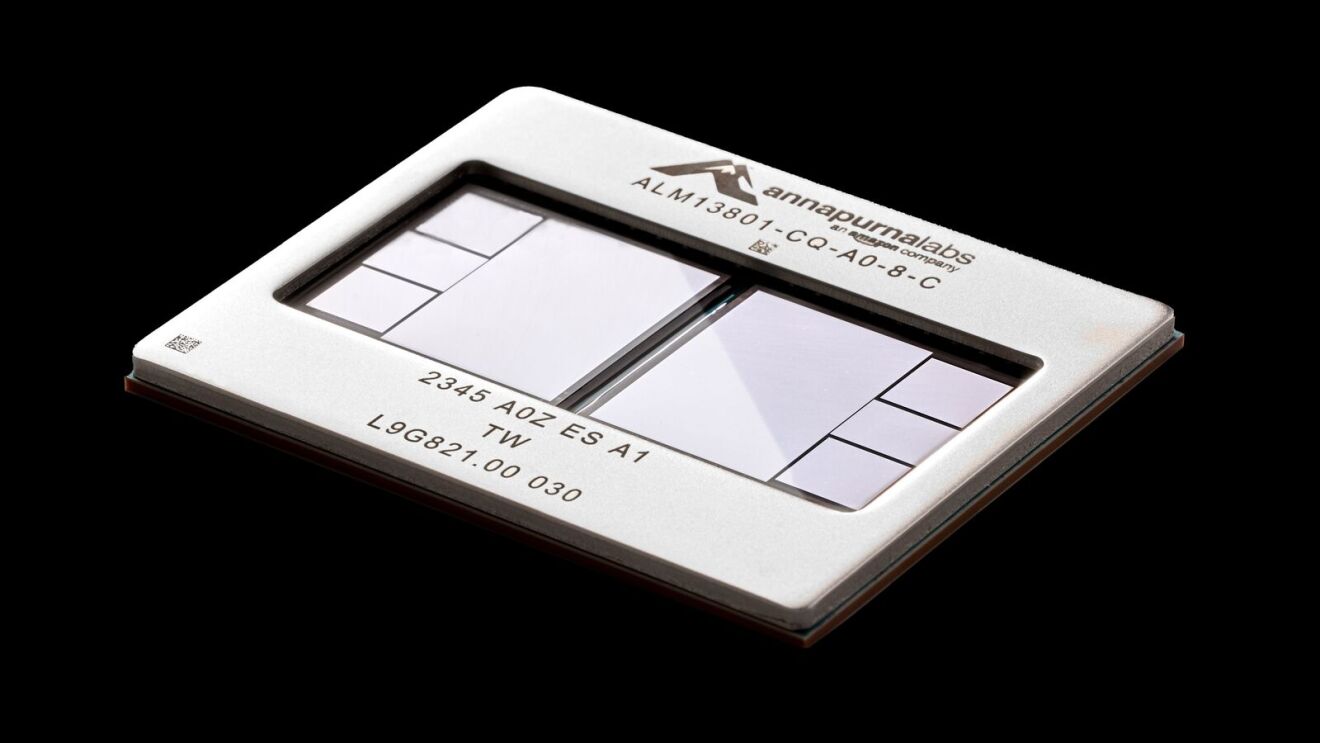

AWS Trainium2 chip

Trn2 instances are generally available today in the US East (Ohio) AWS Region, with availability in additional regions coming soon. Trn2 UltraServers are available in preview. Learn more about today's announcements.
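The Trn2 compute figures in this entry can be cross-checked with simple arithmetic. The sketch below assumes that peak compute adds linearly across the four NeuronLink-connected servers in an UltraServer, a simplification about theoretical peak rather than delivered performance; the function names are hypothetical. Under that assumption, 20.8 petaflops across 16 chips works out to 1.3 petaflops per Trainium2 chip, and four servers give 64 chips and 83.2 peak petaflops per UltraServer.

```python
"""Peak-compute arithmetic for Trn2 instances and UltraServers (figures from the text)."""

TRN2_CHIPS_PER_INSTANCE = 16       # Trainium2 chips in one Trn2 instance
TRN2_INSTANCE_PEAK_PFLOPS = 20.8   # quoted peak petaflops per Trn2 instance
INSTANCES_PER_ULTRASERVER = 4      # Trn2 servers joined by NeuronLink


def peak_pflops_per_chip() -> float:
    """Split an instance's quoted peak compute evenly across its chips."""
    return TRN2_INSTANCE_PEAK_PFLOPS / TRN2_CHIPS_PER_INSTANCE


def ultraserver_chip_count() -> int:
    """Total Trainium2 chips in one Trn2 UltraServer."""
    return TRN2_CHIPS_PER_INSTANCE * INSTANCES_PER_ULTRASERVER


def ultraserver_peak_pflops() -> float:
    """Assumes peak compute adds linearly across the connected servers."""
    return TRN2_INSTANCE_PEAK_PFLOPS * INSTANCES_PER_ULTRASERVER


if __name__ == "__main__":
    print(f"{peak_pflops_per_chip():.1f} PF peak per chip")
    print(f"{ultraserver_chip_count()} chips, {ultraserver_peak_pflops():.1f} PF peak per UltraServer")
```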

December 2, 2024 10:59 PM

Peter DeSantis shows how AWS is innovating across the entire technology stack

Peter DeSantis, senior vice president of AWS Utility Computing, took center stage at re:Invent to deliver his Monday Night Live keynote, continuing the tradition of diving deep into the engineering that powers AWS services.

Joined by guest speakers Dave Brown, vice president, AWS Compute & Networking Services, and Tom Brown, co-founder of AI safety and research company Anthropic, DeSantis revealed how AWS is pushing the boundaries of technology to bring the performance, reliability and low cost of AWS to the most demanding AI workloads.

DeSantis said that the specific needs of AI training and inference workloads represent a new opportunity for AWS teams to invent in entirely different ways—from developing the highest performing chips to interconnecting them with novel technology.

Innovations ranging from the advanced packaging and intricate design elements that pack more compute power and memory into AWS Trainium2 chips, purpose-built for machine learning, to seemingly smaller ones such as the Firefly Optic Plug, which makes it possible to test all the wiring before servers are installed in a data center, have all made a huge difference to AWS’s ability to deploy AI clusters faster.

According to DeSantis, one of the most distinctive things about AWS is the amount of time its leaders spend getting into the details, so they know what’s happening with customers and can make fast, impactful decisions. One such decision, he said, was to start investing in custom silicon 12 years ago, a move that altered the course of the company’s story.

He told the audience that tonight was “the next chapter” in how AWS is innovating across the entire technology stack to bring customers truly differentiated offerings.

December 2, 2024 1:34 PM

AWS shares data center power usage effectiveness (PUE) numbers for the first time

In addition to announcing new, more energy-efficient innovations in data center power, cooling, and hardware design at re:Invent today, AWS has also published its Power Usage Effectiveness (PUE) numbers for the first time ever.

PUE is a metric that measures how efficiently a data center uses energy. A PUE score of 1.0 is considered perfect, indicating that all energy used by the facility goes directly to computing. In 2023, AWS reached a global PUE of 1.15, with its best-performing site registering a PUE of 1.04.

Thanks to efforts to simplify electrical and mechanical designs, adopt innovative cooling systems that combine air and liquid cooling for powerful AI chips, and optimize rack positioning for more efficient energy use, AWS’s new data center components are expected to reach a PUE rating of 1.08. Learn more by visiting AWS’s sustainability webpage.
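PUE is conventionally defined as total facility energy divided by the energy delivered to IT equipment, so the share of total energy spent on overhead such as cooling and power conversion is (PUE - 1) / PUE. The sketch below, with hypothetical helper names, applies that standard definition to the figures above: a PUE of 1.15 means roughly 13% of total facility energy is overhead, compared with about 4% at 1.04 and about 7% at 1.08.

```python
"""Power Usage Effectiveness (PUE) helpers using the standard ratio definition."""


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """PUE = total facility energy / energy delivered to IT equipment."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy that does not reach IT equipment."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return (pue_value - 1.0) / pue_value


if __name__ == "__main__":
    for label, value in [("2023 global", 1.15), ("best site", 1.04), ("new components", 1.08)]:
        print(f"{label}: PUE {value:.2f}, overhead {overhead_share(value):.1%}")
```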

December 2, 2024 12:39 PM

AWS announces new data center components to support AI and improve energy efficiency