Amazon Web Services (AWS) today announced new Amazon Bedrock innovations that offer customers the easiest, fastest, and most secure way to develop advanced generative artificial intelligence (AI) applications and experiences.

Let’s break them down with illustrations created using one of these innovations, Amazon Titan Image Generator (set, fittingly, on the surface of Saturn's largest moon, Titan).

New Custom Model Import capability helps organizations bring their own models to Amazon Bedrock

In AI, two (or even three) models are often better than one. It’s the compounding intelligence effect.

Amazon Bedrock customers have increasingly been putting their own data to work by customizing publicly available models for their domain-specific use cases. That’s because there is a compounding intelligence effect when customers combine the smarts of the different foundation models (FMs) and large language models (LLMs) available in Amazon Bedrock with their own data. Think of it as generative AI’s version of “two heads (or more) are better than one.” That added intelligence also means the resulting applications can serve a wider variety of use cases. So what have customers been asking for? An easy and secure way to add their own custom models to Amazon Bedrock.

With Amazon Bedrock Custom Model Import, organizations can now import and access their own custom models as a fully managed Application Programming Interface (API) in Amazon Bedrock, giving them unprecedented choice when building generative AI applications. To get started, organizations can add models that they customized on Amazon SageMaker, or with another third-party tool or cloud provider, to Amazon Bedrock. After an automated validation process completes, they can access their custom model just like any other model on Amazon Bedrock.

With this new capability, AWS makes it easy for organizations to use a combination of Amazon Bedrock models and their own custom models through the same API. Amazon Bedrock Custom Model Import is available today in preview and supports three of the most popular open model architectures (Flan-T5, Llama, and Mistral), with plans to support more in the future.
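Because imported models are reached through the same API as other Amazon Bedrock models, calling code can stay the same and only the model identifier changes. The sketch below assumes the boto3 `bedrock-runtime` client's `invoke_model` operation; the helper name `invoke_json`, the `<imported-model-arn>` placeholder, and the imported model's `{"prompt": ...}` payload are illustrative assumptions, since each model architecture expects its own request format.

```python
import json
from typing import Any


def invoke_json(client: Any, model_id: str, payload: dict) -> dict:
    """Send a JSON request to a Bedrock model and return the decoded JSON reply.

    `client` is assumed to be a boto3 "bedrock-runtime" client, but any object
    with a compatible `invoke_model` method works (handy for offline tests).
    """
    response = client.invoke_model(
        modelId=model_id,          # a base model ID or an imported model's ARN
        body=json.dumps(payload),  # request format depends on the model architecture
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it fully and parse the JSON.
    return json.loads(response["body"].read())


# Usage (requires AWS credentials and model access; not run here):
# import boto3
# runtime = boto3.client("bedrock-runtime")
# # A Bedrock base model (Titan Text Express takes an "inputText" field):
# base = invoke_json(runtime, "amazon.titan-text-express-v1", {"inputText": "Hello"})
# # A hypothetical imported model; substitute your model's ARN and its payload format:
# custom = invoke_json(runtime, "<imported-model-arn>", {"prompt": "Hello"})
```

Keeping the client as a parameter, rather than creating it inside the helper, makes the same function usable for any model and easy to exercise with a stand-in client.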

Model Evaluation helps customers assess, compare, and select the best model to build and deploy generative AI applications

The delicate balance between model accuracy and model performance is key when it comes to picking your AI models.

Of course, before combining models for more intelligence, customers want a clearer sense of which models will work best for their specific application. Choosing the best model for a specific use case requires a delicate balance between model accuracy and model performance. Until now, organizations had to go through this often laborious and time-consuming balancing act for every new model and use case, which slowed the development and delivery of generative AI experiences to their customers.

Now generally available, Model Evaluation is the fastest way for organizations to analyze and compare models on Amazon Bedrock, reducing the time spent evaluating models so they can bring new applications and experiences to market faster. Customers can quickly get started by selecting predefined evaluation criteria (for example, accuracy and robustness) and uploading their own prompt library or selecting from built-in, publicly available datasets. For subjective criteria or content requiring nuanced judgment, customers can easily set up human-based evaluation workflows. Once customers finish the setup process, Amazon Bedrock runs evaluations and generates a report, so customers can understand how the model performed across their key criteria and quickly select the best models for their use cases.

The Amazon Titan family of AI models just grew by two

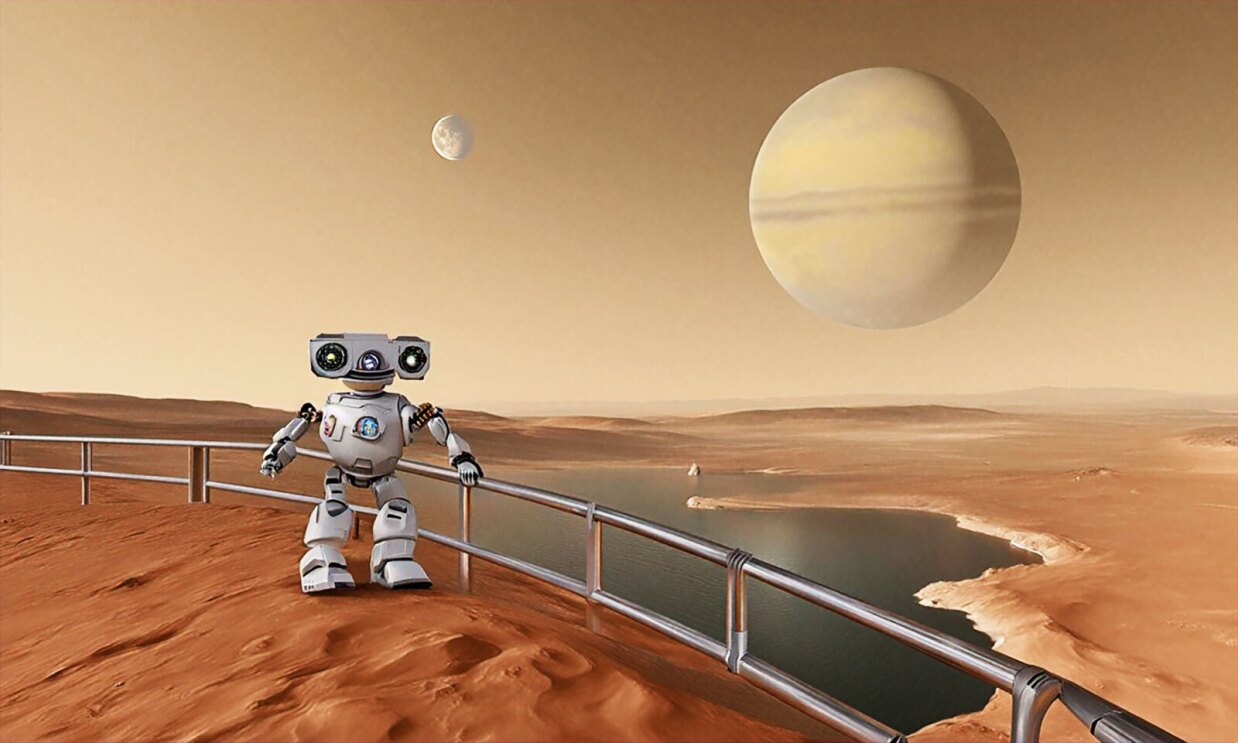

All it takes is simple text to make a beautiful image with Amazon Titan Image Generator.

AWS is pleased to announce the general availability of Amazon Titan Image Generator, now with invisible watermarking, along with the latest version of Amazon Titan Text Embeddings. Both models are exclusive to Amazon Bedrock.

Organizations in advertising, e-commerce, media and entertainment, and many other industries can now use Amazon Titan Image Generator to produce high-quality images from scratch, or to enhance and edit existing images, at low cost. All it takes is typing a text description into a prompt field, and Amazon Titan turns that text into whatever image and style you describe. For example, the prompt for the image displayed above was: "From the surface of the moon Titan with Saturn in the background, the text 'flower' in modern font emerges from a very friendly robot's mouth, the text then becomes images of sunny flowers on the moon's surface."
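For developers, a text prompt like the one above becomes a small JSON request. The sketch below is a minimal example, assuming the `amazon.titan-image-generator-v1` model ID and the `TEXT_IMAGE` request shape (`textToImageParams`, `imageGenerationConfig`) documented for the first Titan Image Generator release; the helper names and default values are illustrative choices, not part of the announcement.

```python
import base64
import json

# Assumed model ID for the first Titan Image Generator release.
TITAN_IMAGE_MODEL_ID = "amazon.titan-image-generator-v1"


def text_to_image_body(prompt: str, width: int = 1024, height: int = 1024,
                       number_of_images: int = 1, cfg_scale: float = 8.0,
                       seed: int = 0) -> str:
    """Build a TEXT_IMAGE request body for Titan Image Generator (illustrative defaults)."""
    return json.dumps({
        "taskType": "TEXT_IMAGE",
        "textToImageParams": {"text": prompt},
        "imageGenerationConfig": {
            "numberOfImages": number_of_images,
            "width": width,
            "height": height,
            "cfgScale": cfg_scale,  # how closely the image follows the prompt
            "seed": seed,           # fixed seed for reproducible output
        },
    })


def decode_images(response_body: bytes) -> list:
    """Decode the base64-encoded images in a Titan Image Generator response."""
    return [base64.b64decode(img) for img in json.loads(response_body)["images"]]


# Usage (requires AWS credentials and model access; not run here):
# import boto3
# runtime = boto3.client("bedrock-runtime")
# resp = runtime.invoke_model(modelId=TITAN_IMAGE_MODEL_ID,
#                             body=text_to_image_body("A friendly robot on Titan"),
#                             contentType="application/json",
#                             accept="application/json")
# png_bytes = decode_images(resp["body"].read())[0]
```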

Amazon Titan applies an invisible watermark to all images it generates. The watermark helps identify AI-generated images, which promotes the safe, secure, and transparent development of AI technology and helps reduce the spread of disinformation. The model can also check images for the presence of a watermark, helping customers confirm whether an image was generated by Amazon Titan Image Generator.

The second member of the family announced today is Amazon Titan Text Embeddings V2, which is optimized for Retrieval Augmented Generation (RAG) and well suited to use cases such as information retrieval, question-and-answer chatbots, and personalized recommendations. RAG is a popular model-customization technique in which the FM connects to additional knowledge sources that it can reference to produce more accurate responses. While this improves results, running these operations can be compute- and storage-intensive. With Amazon Titan Text Embeddings V2, launching later in the month, customers can choose flexible embedding sizes to suit diverse application needs, from low-latency mobile deployments to high-accuracy asynchronous workflows. Using smaller embeddings reduces overall storage by up to four times while retaining 97% of the accuracy for RAG use cases.
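The "up to four times" figure follows from simple arithmetic: raw vector storage grows linearly with embedding length, so shrinking each vector from 1024 to 256 values cuts storage by a factor of four. The sketch below assumes float32 storage, ignores index overhead, and assumes the 256, 512, and 1024 output sizes and the `inputText`, `dimensions`, and `normalize` request fields of `amazon.titan-embed-text-v2:0`; it does not model the accuracy trade-off.

```python
import json

# Output sizes assumed for Titan Text Embeddings V2 (1024 is the default).
SUPPORTED_DIMENSIONS = (256, 512, 1024)
BYTES_PER_FLOAT32 = 4


def embed_request_body(text: str, dimensions: int = 1024, normalize: bool = True) -> str:
    """Build an invoke_model body for amazon.titan-embed-text-v2:0 (assumed fields)."""
    if dimensions not in SUPPORTED_DIMENSIONS:
        raise ValueError(f"dimensions must be one of {SUPPORTED_DIMENSIONS}")
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize})


def index_size_bytes(num_vectors: int, dimensions: int) -> int:
    """Raw storage for float32 vectors, ignoring any vector-index overhead."""
    return num_vectors * dimensions * BYTES_PER_FLOAT32


full = index_size_bytes(1_000_000, 1024)   # 4,096,000,000 bytes
small = index_size_bytes(1_000_000, 256)   # 1,024,000,000 bytes
print(full / small)  # 4.0
```

Choosing 512 dimensions would halve storage instead; which size fits depends on how much retrieval accuracy an application can trade for lower latency and cost.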

With Guardrails for Amazon Bedrock, customers can easily implement safeguards to remove personal and sensitive information, profanity, and specific words, and to block harmful content

Amazon Bedrock's built-in guardrails keep AI applications safe for everyone.

For generative AI to be pervasive across every industry, organizations must ensure it is implemented in a safe, trustworthy, and responsible way. Many models use built-in controls to filter undesirable and harmful content, but organizations want to further curate models so that responses remain relevant, align with company policies, and adhere to responsible AI principles. Now generally available, Guardrails for Amazon Bedrock offers industry-leading safety protection, helping customers block up to 85% of harmful content.

To create a guardrail, customers simply provide a natural-language description defining the denied topics within the context of their application. Customers can also configure thresholds to filter out content across areas like hate speech, insults, sexualized language, and violence. This is in addition to filters that remove profanity or specific blocked words. Guardrails is the only solution offered by a top cloud provider that allows customers to have built-in and custom safeguards in a single offering, and it works with all large language models in Amazon Bedrock, as well as fine-tuned models.
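The guardrail setup described above maps naturally onto a single configuration object: a denied topic written in natural language, per-category filter thresholds, and word filters. The sketch below assembles keyword arguments for the boto3 `bedrock` client's `create_guardrail` operation, assuming its `topicPolicyConfig`, `contentPolicyConfig`, and `wordPolicyConfig` fields and the `NONE`/`LOW`/`MEDIUM`/`HIGH` strengths; the helper name, the blocked-message wording, and the example topic and word are hypothetical.

```python
VALID_STRENGTHS = {"NONE", "LOW", "MEDIUM", "HIGH"}


def guardrail_request(name: str, topic_name: str, topic_definition: str,
                      blocked_words: list, strength: str = "HIGH") -> dict:
    """Assemble keyword arguments for create_guardrail (hypothetical helper)."""
    if strength not in VALID_STRENGTHS:
        raise ValueError(f"strength must be one of {sorted(VALID_STRENGTHS)}")
    # Content filters for the categories named in the text, same threshold on input and output.
    filters = [
        {"type": category, "inputStrength": strength, "outputStrength": strength}
        for category in ("HATE", "INSULTS", "SEXUAL", "VIOLENCE")
    ]
    return {
        "name": name,
        # A denied topic described in natural language.
        "topicPolicyConfig": {
            "topicsConfig": [
                {"name": topic_name, "definition": topic_definition, "type": "DENY"}
            ]
        },
        "contentPolicyConfig": {"filtersConfig": filters},
        # Custom blocked words plus the managed profanity list.
        "wordPolicyConfig": {
            "wordsConfig": [{"text": word} for word in blocked_words],
            "managedWordListsConfig": [{"type": "PROFANITY"}],
        },
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't share that response.",
    }


# Usage (requires AWS credentials; the topic and word below are hypothetical):
# import boto3
# bedrock = boto3.client("bedrock")
# resp = bedrock.create_guardrail(**guardrail_request(
#     "support-bot-guardrail",
#     "Investment advice",
#     "Recommendations about buying or selling specific stocks or other securities.",
#     ["ProjectCodename"],
# ))
# print(resp["guardrailId"])
```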

Tens of thousands of customers have already selected Amazon Bedrock as the foundation for their generative AI strategy because it gives them access to the broadest selection of first- and third-party LLMs and other FMs from leading AI companies, including AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon, along with leading ease-of-use capabilities to quickly build and deploy generative AI applications. Amazon Bedrock’s models are offered as a fully managed service, so customers don’t need to provision compute instances, and deployment, scaling, and continuous optimization are handled for them.
